The end of Request Validation

Filed under: Development, Security

One of the often-overlooked features of ASP.Net applications was request validation. If you are a .Net web developer, you have probably seen it before, and I have covered it on multiple occasions on this site. Its main goal was to help keep XSS-style input from being submitted by the user. .Net Core has not brought this feature along, and there is no sign that it will return.

Request Validation Limitations

Request validation was a nice-to-have: a small extra layer of protection. It wasn’t foolproof and had more limitations than many expected. The biggest one was that it only supported the HTML context for cross-site scripting. Basically, it tried to deter end users from submitting HTML tags or elements. Cross-site scripting is context sensitive, however, and the attribute, URL, JavaScript, and CSS contexts were not considered.

In addition, bypasses for request validation have been identified over the years. For example, submitting Unicode wide characters and then storing the data in ASCII format could allow an attacker to bypass the validation.

Input validation is only a part of the solution for cross-site scripting. The ultimate end-state is the use of output encoding of the data sent back to the browser. Why? Not all data is guaranteed to go through our expected inputs. Remember, we don’t trust the database.
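As a minimal sketch of output encoding for the HTML context, the following uses `System.Net.WebUtility.HtmlEncode` from the base class library. The `RenderGreeting` helper and the sample value are hypothetical, and the other contexts (attribute, URL, JavaScript, CSS) each need their own encoder.

```csharp
using System;
using System.Net;

public static class OutputEncodingSketch
{
    // Hypothetical helper: encode untrusted data right before it is written into HTML.
    public static string RenderGreeting(string untrustedName)
    {
        return "<p>Hello, " + WebUtility.HtmlEncode(untrustedName) + "</p>";
    }

    public static void Main()
    {
        // The script tag is emitted as inert text rather than markup.
        Console.WriteLine(RenderGreeting("<script>alert(1)</script>"));
    }
}
```

The encoding happens at the point of output, so it applies no matter how the value reached the application (form post, database, or another system).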

False Sense of Security?

Some have argued that the feature did more harm than good because it created a false sense of security compared to what it could actually do. While I don’t completely agree with that, I have seen those examples. I have seen developers assume they were protected just because request validation was enabled. Unfortunately, this is a bad assumption based on a misunderstanding of the feature. The truth is that it was never meant to stop all cross-site scripting. The goal was to provide some default input validation for a specific vulnerability. Maybe it was misunderstood. Maybe it was a great idea with an impossible implementation. In either case, it was a small piece of the puzzle.

So What Now?

Moving forward with Core, request validation is out of the picture. There is nothing we can do about that from a framework perspective, and maybe we don’t have to. Some people may recreate the same functionality in third-party packages. That may work; it may not. Now is our opportunity to make sure we understand the flaws and the proper protection mechanisms. When it comes to cross-site scripting, there are many techniques we can use to reduce the risk. I rely on output encoding as the first and biggest step. There are also things like Content Security Policy and other response headers that can help add layers of protection. I talk about a few of these options in my new course, “Security Fundamentals for Application Teams”.

Remember that understanding your framework is critical in helping reduce security risks in your application. If you are making the switch to .Net Core, keep in mind that not all the features you may be used to exist. Understand these changes so you don’t get bitten.

Security Tips for Copy/Paste of Code From the Internet

Filed under: Development, Security

Developing applications has long involved using code snippets found through textbooks or on the Internet. Rather than re-invent the wheel, it makes sense to identify existing code that helps solve a problem. It may also help speed up the development time.

Years ago, maybe 12, I remember a co-worker who had a SQL Injection vulnerability in his application. The culprit: code copied from someone else. At the time, I explained that once you copy code into your application, it is your responsibility.

Here, 12 years later, I still see this type of occurrence: code snippets taken directly from the web and used in the application. In many of these cases there may be some form of security weakness. How often do we, as developers, really analyze and understand all the details of the code that we copy?

Here are a few tips when working with external code brought into your application.

Understand what it does

If you were looking for code snippets, you should have a good idea of what the code will do. More precisely, you have an understanding of what you think that code will do. How rigorously do you inspect it to make sure that is all it does? Maybe the code performs the specific task you set out to complete, but what happens if it also does things you weren’t even looking for? This may not be as much of a concern with very small snippets. However, larger sections of code could cover up other functionality. This doesn’t mean that the functionality is intentionally malicious, but undocumented, unintended functionality may open up risk to the application.

Change any passwords or secrets

Depending on the code that you are searching for, there may be secrets within it. For example, encryption routines are commonly grabbed off the Internet. To be complete, they contain hard-coded IVs and keys. These should be changed to something unique when imported into your projects. The same goes for code that has passwords or other hard-coded values that may provide access to the system.
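As a rough sketch of replacing copied key material, the following generates a fresh random key and IV with `System.Security.Cryptography.Aes` instead of reusing values from a snippet. The class and method names are hypothetical, and where the key is stored afterward (a secrets store, protected configuration) is outside the scope of this example.

```csharp
using System;
using System.Security.Cryptography;

public static class KeyMaterialSketch
{
    // Returns a fresh random 256-bit key. Keep it out of source control.
    public static byte[] NewKey()
    {
        using (Aes aes = Aes.Create())
        {
            aes.KeySize = 256;
            aes.GenerateKey();
            return aes.Key;
        }
    }

    // Returns a fresh random IV (one AES block, 16 bytes). Use a new one per message.
    public static byte[] NewIv()
    {
        using (Aes aes = Aes.Create())
        {
            aes.GenerateIV();
            return aes.IV;
        }
    }

    public static void Main()
    {
        Console.WriteLine("Key bytes: " + NewKey().Length);
        Console.WriteLine("IV bytes: " + NewIv().Length);
    }
}
```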

As I was writing this, I noticed a post about the RadAsyncUpload control regarding the defaults within it. While this is not code copy/pasted from the Internet, it highlights the need to understand the default configurations and that some values should be changed to help provide better protections.

Look for potential vulnerabilities

In addition to the above concerns, the code may have vulnerabilities in it. Imagine a snippet of code used to select data from a SQL database. What if that code passes your tests by returning the right data, but uses inline SQL and is vulnerable to SQL Injection? The same could happen with code that is vulnerable to Cross-Site Scripting or that does not check authorization properly.

We have to do a better job of performing code reviews on these external snippets, just as we should be doing it on our custom written internal code. Finding snippets of code that perform our needed functionality can be a huge benefit, but we can’t just assume it is production ready. If you are using this type of code, take the time to understand it and review it for potential issues. Don’t stop at just verifying the functionality. Take steps to vet the code just as you would any other code within your application.

SQL Injection: Calling Stored Procedures Dynamically

Filed under: Development, Security, Testing

It is not news that SQL Injection is possible within a stored procedure. There have been plenty of articles discussing this issue. However, there is a particular way some developers execute their stored procedures that makes them vulnerable to SQL Injection, even when the stored procedure itself is actually safe.

Look at the example below. The code is using a stored procedure, but it is calling the stored procedure using a dynamic statement.

    conn.Open();
    // Vulnerable: user input is concatenated directly into the EXEC statement.
    var cmdText = "exec spGetData '" + txtSearch.Text + "'";
    SqlDataAdapter adapter = new SqlDataAdapter(cmdText, conn);
    DataSet ds = new DataSet();
    adapter.Fill(ds);
    conn.Close();
    grdResults.DataSource = ds.Tables[0];
    grdResults.DataBind();

It doesn’t really matter what is in the stored procedure for this particular example, because the stored procedure is not where the injection is going to occur. Instead, the injection occurs when the EXEC statement is concatenated together. The search value (an email address in this example) is being dynamically added in, which we know is bad.

This can be quickly tested by just inserting a single quote (') into the search field and viewing the error message returned. It would look something like this:

    System.Data.SqlClient.SqlException (0x80131904): Unclosed quotation mark after the character string '''.
       at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)
       at System.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)
       at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, Boolean callerHasConnectionLock, Boolean asyncClose)
       at System.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj, Boolean& dataReady)
       at System.Data.SqlClient.SqlDataReader.TryConsumeMetaData()
       at System.Data.SqlClient.SqlDataReader.get_MetaData()
       at

With a little more probing, it is possible to get more information leading us to understand how this SQL is constructed. For example, by placing ',' into the search field, we see a different error message:

System.Data.SqlClient.SqlException (0x80131904): Procedure or function spGetData has too many arguments specified. at System.Data.SqlClient.SqlConnection.

The mention of the stored procedure having too many arguments helps identify this technique for calling stored procedures.

With SQL we have the ability to execute more than one statement in a single batch. In this case, we just need to break out of the current exec statement and add our own statement. Remember, this doesn’t affect the execution of the spGetData stored procedure. We are looking at the ability to add new statements to the request.

Let’s assume we search for this:

    james@test.com';SELECT * FROM tblUsers--

This would change our cmdText to look like:

    exec spGetData 'james@test.com';SELECT * FROM tblUsers--'

The above query will execute the spGetData stored procedure and then execute the SELECT statement that follows, ultimately returning 2 result sets. In many cases, this is not that useful for an attacker because the second result set would not be returned to the user. However, this doesn’t make an attack impossible. Instead, it shifts the attack toward what we can do rather than what we can receive.
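The concatenation can be reproduced without a database. This sketch only builds the command text and never executes it; `BuildCommandText` is a hypothetical helper that mirrors the vulnerable line from the example.

```csharp
using System;

public static class ConcatenationSketch
{
    // Mirrors the vulnerable pattern: the search value is pasted straight into the EXEC statement.
    public static string BuildCommandText(string search)
    {
        return "exec spGetData '" + search + "'";
    }

    public static void Main()
    {
        // Expected input: one statement with one string argument.
        Console.WriteLine(BuildCommandText("james@test.com"));

        // Malicious input: the quote closes the argument, and the trailing
        // comment marker hides the quote that the application appends.
        Console.WriteLine(BuildCommandText("james@test.com';SELECT * FROM tblUsers--"));
    }
}
```

Printing the generated text during a code review is a quick way to see where the attacker-controlled portion ends up in the statement.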

At this point, we are able to execute any commands against the SQL Server that the user has permission to run. This could mean executing other stored procedures, dropping or modifying tables, adding records to a table, or even more advanced attacks such as manipulating the underlying operating system. An example might be to do something like this:

    james@test.com';DROP TABLE tblUsers--

If the user has permissions, the server would drop tblUsers, causing a lot of problems.

When calling stored procedures, it should be done using command parameters, rather than dynamically. The following is an example of using proper parameters:

    conn.Open();
    SqlCommand cmd = new SqlCommand();
    cmd.CommandText = "spGetData";
    cmd.CommandType = CommandType.StoredProcedure;
    cmd.Connection = conn;
    // The search value is sent as data, never as part of the statement text.
    cmd.Parameters.AddWithValue("@someData", txtSearch.Text);
    SqlDataAdapter adapter = new SqlDataAdapter(cmd);
    DataSet ds = new DataSet();
    adapter.Fill(ds);
    conn.Close();
    grdResults.DataSource = ds.Tables[0];
    grdResults.DataBind();

The code above adds parameters to the command object, removing the ability to inject into the dynamic code.

It is easy to think that because it is a stored procedure, and the stored procedure may be safe, that we are secure. Unfortunately, simple mistakes like this can lead to a vulnerability. Make sure that you are properly making database calls using parameterized queries. Don’t use dynamic SQL, even if it is to call a stored procedure.

XXE in .Net and XPathDocument

Filed under: Security

XXE, or XML External Entity, is an attack against applications that parse XML. It occurs when XML input contains a reference to an external entity that it wasn’t expected to have access to. Through this article, I will discuss how .Net handles XML for certain objects and how to properly configure these objects to block XXE attacks. It is important to understand that the different versions of the .Net framework handle this differently.

I will cover the XPathDocument object in this article. For details regarding XmlReader, XmlTextReader, and XmlDocument check out my original XXE and .Net article.

This article uses 2 files to demonstrate XXE which are described next.

Test.xml – This file is used as the test xml loaded into the application. It consists of the following:

    <?xml version="1.0" ?>
    <!DOCTYPE doc [<!ENTITY win SYSTEM "file:///C:/Users/user/Documents/testdata.txt">]>
    <doc>&win;</doc>

testData.txt – This file contains one simple string that we are attempting to import into our XML file: “This is test data for xml”

XPathDocument

There are multiple ways to instantiate an XPathDocument object to parse XML. Let’s take a look at a few of these methods.

Streams

You can create an XPathDocument by passing in a stream object. The following code snippet shows how this would look:

    static void LoadXPathDocStream()
    {
        string fileName = @"C:\Users\user\Documents\test.xml";
        StreamReader sr = new StreamReader(fileName);
        XPathDocument xmlDoc = new XPathDocument(sr);
        XPathNavigator nav = xmlDoc.CreateNavigator();
        Console.WriteLine("Stream: " + nav.InnerXml.ToString());
    }

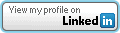

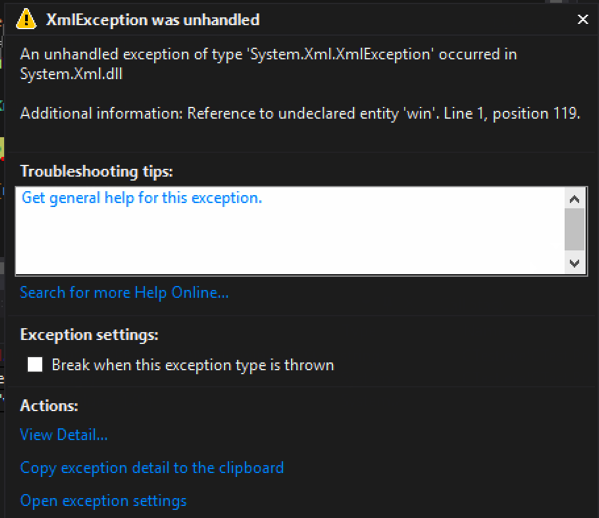

The code above uses an XPathNavigator object to read the data from the document. Prior to .Net 4.5.2, the code above is vulnerable to XXE by default. The following image shows the response when executed:

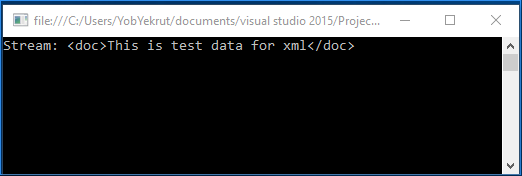

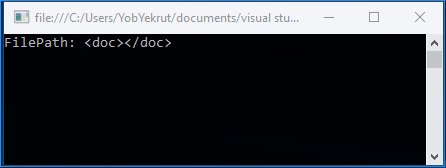

In .Net 4.5.2 and 4.6, this same call has been modified to be safe by default. The following image shows the same code targeting 4.5.2; notice that the output no longer returns the test data.

File Path

Another option to load an XPathDocument is by passing in a path to the file. The following code snippet shows how this is done:

    static void LoadXPathDocFile()
    {
        string fileName = @"C:\Users\user\Documents\test.xml";
        XPathDocument xmlDoc = new XPathDocument(fileName);
        XPathNavigator nav = xmlDoc.CreateNavigator();
        Console.WriteLine("FilePath: " + nav.InnerXml.ToString());
    }

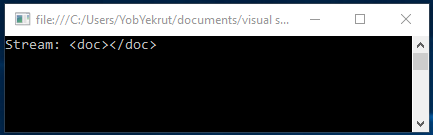

In this example, the XPathDocument constructor takes a filename directly to access the xml. Just like the use of the stream above, in versions prior to 4.5.2, this is vulnerable by default. The following shows the output when targeting .Net 4.5.1:

In .Net 4.5.2 and 4.6, this same call has been modified to be safe by default. The following image shows the output is no longer returning the test data.

XmlReader

As mentioned above, XmlReader was covered in the previous post (https://www.jardinesoftware.net/2016/05/26/xxe-and-net/). I wanted to mention it here because, just like in the previous post, the method is secure by default. The following example shows using an XmlReader to create the XPathDocument:

    static void LoadXPathDocFromReader()
    {
        string fileName = @"C:\Users\user\Documents\test.xml";
        XmlReader myReader = XmlReader.Create(fileName);
        XPathDocument xmlDoc = new XPathDocument(myReader);
        XPathNavigator nav = xmlDoc.CreateNavigator();
        Console.WriteLine("XmlReader: " + nav.InnerXml.ToString());
    }

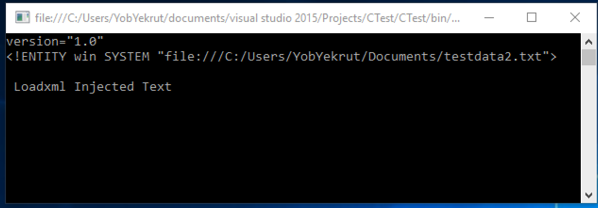

When executed, the reader defaults to not parsing DTDs and will throw an exception. This can be modified by setting the XmlReaderSettings to allow DTD parsing.
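Rather than relying on the default, the settings can be stated explicitly so the intent survives framework upgrades and later edits. The following is a sketch that assumes .Net 4.0 or later (for `DtdProcessing`); the `LoadSafely` helper name is hypothetical, and the XML is supplied as a string so the example does not depend on files on disk.

```csharp
using System;
using System.IO;
using System.Xml;
using System.Xml.XPath;

public static class XPathDocSketch
{
    // Hypothetical helper: build an XPathDocument through a reader that rejects DTDs.
    public static XPathDocument LoadSafely(TextReader input)
    {
        XmlReaderSettings settings = new XmlReaderSettings();
        settings.DtdProcessing = DtdProcessing.Prohibit; // throw if the document contains a DTD
        settings.XmlResolver = null;                      // never resolve external resources

        using (XmlReader reader = XmlReader.Create(input, settings))
        {
            return new XPathDocument(reader);
        }
    }

    public static void Main()
    {
        string xml = "<?xml version=\"1.0\" ?>" +
            "<!DOCTYPE doc [<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata.txt\">]>" +
            "<doc>&win;</doc>";
        try
        {
            LoadSafely(new StringReader(xml));
            Console.WriteLine("Parsed (not expected for a document with a DTD)");
        }
        catch (XmlException ex)
        {
            Console.WriteLine("Rejected: " + ex.Message);
        }
    }
}
```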

Wrap Up

Looking at the examples above, it appears the safest route is to use the XmlReader to create the XPathDocument. The other two methods are insecure by default (in versions 4.5.1 and earlier) and I didn’t see any easy way to make them secure by the properties they had available.

XXE and .Net

XXE, or XML External Entity, is an attack against applications that parse XML. It occurs when XML input contains a reference to an external entity that it wasn’t expected to have access to. Through this article, I will discuss how .Net handles XML for certain objects and how to properly configure these objects to block XXE attacks. It is important to understand that the different versions of the .Net framework handle this differently. I will point out the differences for each object.

I will cover the XmlReader, XmlTextReader, and XmlDocument. Here is a quick summary regarding the default settings:

| Object | .Net Version | Safe by Default? |
|---|---|---|
| XmlReader | Prior to 4.0 | Yes |
| XmlReader | 4.0+ | Yes |
| XmlTextReader | Prior to 4.0 | No |
| XmlTextReader | 4.0+ | No |
| XmlDocument | 4.5 and earlier | No |
| XmlDocument | 4.6 | Yes |

XmlReader

Prior to 4.0

The ProhibitDtd property is used to determine if a DTD will be parsed.

- True (default) – throws an exception if a DTD is identified. (See Figure 1)

- False – Allows parsing the DTD. (Potentially Vulnerable)

Code that throws an exception when a DTD is processed: By default, ProhibitDtd is set to true and will throw an exception when an entity is referenced.

    static void Reader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlReader myReader = XmlReader.Create(new StringReader(xml));
        while (myReader.Read())
        {
            Console.WriteLine(myReader.Value);
        }
        Console.ReadLine();
    }

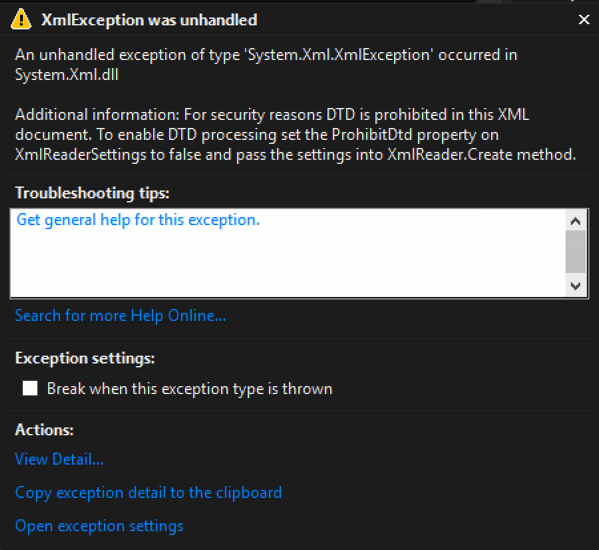

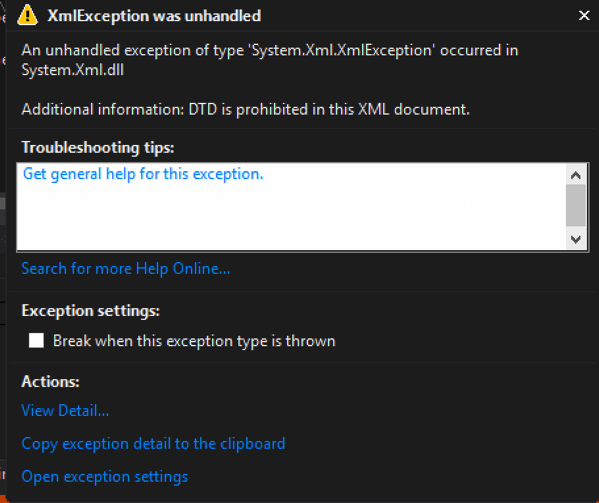

Exception when executed:

[Figure 1]

Code that allows a DTD to be processed: Using the XmlReaderSettings object, it is possible to allow the parsing of the entity. This could make your application vulnerable to XXE.

    static void Reader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlReaderSettings rs = new XmlReaderSettings();
        rs.ProhibitDtd = false; // potentially vulnerable: DTDs will be parsed
        XmlReader myReader = XmlReader.Create(new StringReader(xml), rs);
        while (myReader.Read())
        {
            Console.WriteLine(myReader.Value);
        }
        Console.ReadLine();
    }

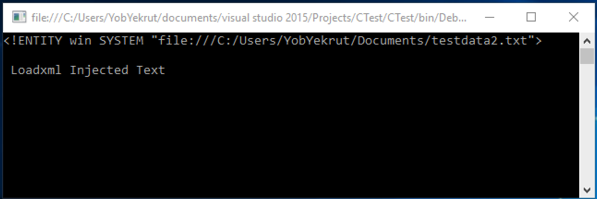

Output when executed showing injected text:

[Figure 2]

.Net 4.0+

In .Net 4.0, they made a change from using the ProhibitDtd property to the new DtdProcessing enumeration. There are now three (3) options:

- Prohibit (default) – Throws an exception if a DTD is identified.

- Ignore – Ignores any DTD specifications in the document, skipping over them and continues processing the document.

- Parse – Will parse any DTD specifications in the document. (Potentially Vulnerable)

Code that throws an exception when a DTD is processed: By default, DtdProcessing is set to Prohibit, blocking any external entities and creating safe code.

    static void Reader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlReader myReader = XmlReader.Create(new StringReader(xml));
        while (myReader.Read())
        {
            Console.WriteLine(myReader.Value);
        }
        Console.ReadLine();
    }

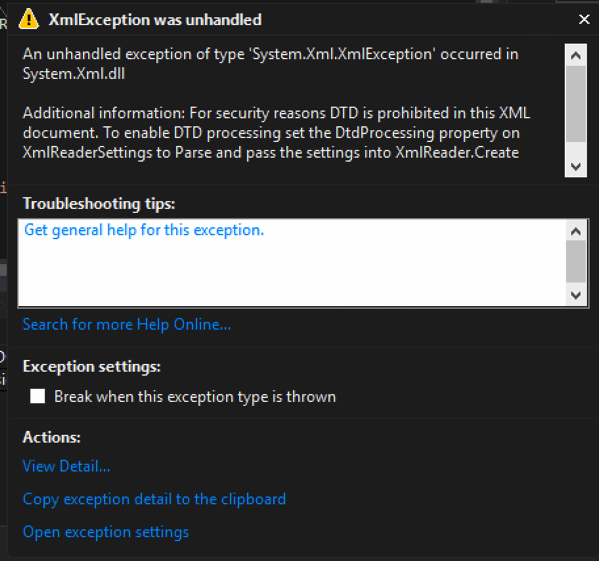

Exception when executed:

[Figure 3]

Code that ignores DTDs and continues processing: Using the XmlReaderSettings object, setting DtdProcessing to Ignore will skip processing any entities. In this case, it threw an exception because there was a reference to the entity that was skipped.

    static void Reader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlReaderSettings rs = new XmlReaderSettings();
        rs.DtdProcessing = DtdProcessing.Ignore;
        XmlReader myReader = XmlReader.Create(new StringReader(xml), rs);
        while (myReader.Read())
        {
            Console.WriteLine(myReader.Value);
        }
        Console.ReadLine();
    }

Output when executed ignoring the DTD (Exception due to trying to use the unprocessed entity):

[Figure 4]

Code that allows a DTD to be processed: Using the XmlReaderSettings object, setting DtdProcessing to Parse will allow processing the entities. This potentially makes your code vulnerable.

    static void Reader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlReaderSettings rs = new XmlReaderSettings();
        rs.DtdProcessing = DtdProcessing.Parse; // potentially vulnerable: DTDs will be parsed
        XmlReader myReader = XmlReader.Create(new StringReader(xml), rs);
        while (myReader.Read())
        {
            Console.WriteLine(myReader.Value);
        }
        Console.ReadLine();
    }

Output when executed showing injected text:

[Figure 5]

XmlTextReader

The XmlTextReader uses the same properties as the XmlReader object; however, there is one big difference. The XmlTextReader defaults to parsing XML entities, so you need to explicitly tell it not to.

Prior to 4.0

The ProhibitDtd property is used to determine if a DTD will be parsed.

- True – throws an exception if a DTD is identified. (See Figure 1)

- False (Default) – Allows parsing the DTD. (Potentially Vulnerable)

Code that allows a DTD to be processed (potentially vulnerable): By default, the XmlTextReader sets the ProhibitDtd property to false, allowing entities to be parsed and the code to potentially be vulnerable.

    static void TextReader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlTextReader myReader = new XmlTextReader(new StringReader(xml));
        while (myReader.Read())
        {
            if (myReader.NodeType == XmlNodeType.Element)
            {
                Console.WriteLine(myReader.ReadElementContentAsString());
            }
        }
        Console.ReadLine();
    }

Code that blocks the DTD from being parsed and throws an exception: Setting the ProhibitDtd property to true (explicitly) will block DTDs from being processed, making the code safe from XXE. Notice that the XmlTextReader exposes the ProhibitDtd property directly; it doesn’t have to use the XmlReaderSettings object.

    static void TextReader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlTextReader myReader = new XmlTextReader(new StringReader(xml));
        myReader.ProhibitDtd = true; // set directly on the reader
        while (myReader.Read())
        {
            if (myReader.NodeType == XmlNodeType.Element)
            {
                Console.WriteLine(myReader.ReadElementContentAsString());
            }
        }
        Console.ReadLine();
    }

4.0+

In .Net 4.0, they made a change from using the ProhibitDtd property to the new DtdProcessing enumeration. There are now three (3) options:

- Prohibit – Throws an exception if a DTD is identified.

- Ignore – Ignores any DTD specifications in the document, skipping over them and continues processing the document.

- Parse (Default) – Will parse any DTD specifications in the document. (Potentially Vulnerable)

Code that allows a DTD to be processed (vulnerable): By default, the XmlTextReader sets DtdProcessing to Parse, making the code potentially vulnerable to XXE.

    static void TextReader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlTextReader myReader = new XmlTextReader(new StringReader(xml));
        while (myReader.Read())
        {
            if (myReader.NodeType == XmlNodeType.Element)
            {
                Console.WriteLine(myReader.ReadElementContentAsString());
            }
        }
        Console.ReadLine();
    }

Code that blocks the DTD from being parsed: To block entities from being parsed, you must explicitly set the DtdProcessing property to Prohibit or Ignore. Note that this is set directly on the XmlTextReader and not through the XmlReaderSettings object.

    static void TextReader()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlTextReader myReader = new XmlTextReader(new StringReader(xml));
        myReader.DtdProcessing = DtdProcessing.Prohibit; // set directly on the reader
        while (myReader.Read())
        {
            if (myReader.NodeType == XmlNodeType.Element)
            {
                Console.WriteLine(myReader.ReadElementContentAsString());
            }
        }
        Console.ReadLine();
    }

Output when Dtd is prohibited:

[Figure 6]

XmlDocument

For the XmlDocument, you need to change the default XmlResolver object to prohibit a DTD from being parsed.

.Net 4.5 and Earlier

By default, the XmlDocument uses an XmlUrlResolver, which will resolve entities declared in a DTD included in the XML document. To prohibit this, set XmlResolver = null.

Code that does not set the XmlResolver properly (potentially vulnerable): The default XmlResolver will parse entities, making the following code potentially vulnerable.

    static void Load()
    {
        string fileName = @"C:\Users\user\Documents\test.xml";
        XmlDocument xmlDoc = new XmlDocument();
        xmlDoc.Load(fileName);
        Console.WriteLine(xmlDoc.InnerText);
        Console.ReadLine();
    }

Code that sets the XmlResolver to null, blocking any DTDs from being processed: To block entities from being parsed, you must explicitly set the XmlResolver to null. This example uses LoadXml instead of Load, but they both work the same in this case.

    static void LoadXML()
    {
        string xml = "<?xml version=\"1.0\" ?><!DOCTYPE doc " +
            "[<!ENTITY win SYSTEM \"file:///C:/Users/user/Documents/testdata2.txt\">]" +
            "><doc>&win;</doc>";
        XmlDocument xmlDoc = new XmlDocument();
        xmlDoc.XmlResolver = null; // must be set before loading the XML
        xmlDoc.LoadXml(xml);
        Console.WriteLine(xmlDoc.InnerText);
        Console.ReadLine();
    }

.Net 4.6

It appears that in .Net 4.6, the XmlResolver defaults to null, making the XmlDocument safe by default. However, you can still set the XmlResolver explicitly, just as you would prior to 4.6 (see the previous code snippet).

Does the End of an Iteration Change Your View of Risk?

Filed under: Development, Security, Testing

You have been working hard for the past few weeks or months on the latest round of features for your flagship product. You are excited. The team is excited. Then a security test identifies a vulnerability. Balloons deflate and everyone starts to scramble.

Take a breath.

Not all vulnerabilities are created equal and the risk that each presents is vastly different. The organization should already have a process for triaging security findings. That process should be assessing the risk of the finding to determine its impact on the application, organization, and your customers. Some of these flaws will need immediate attention. Some may require holding up the release. Some may pose a lower risk and can wait.

Take the time to analyze the situation.

If an item is severe and poses great risk, by all means, stop what you are doing and fix it. But what happens when the risk is fairly low? When I say risk, I include in that the ability for it to be exploited. The difficulty of exploitation can be a critical factor in the decision you make.

When does the risk of remediation override the risk of waiting until the next iteration?

There are some instances where the risk to remediate so late in the iteration may actually be higher than waiting until the next iteration to resolve the actual issue. But all security vulnerabilities need to be fixed, you say? This is not an attempt to get out of doing work or not resolve issues. However, I believe there are situations where the risk of the exploit is less than the risk of trying to fix it in a chaotic, last minute manner.

I can’t count the number of times I have seen issues arise that appeared to be simple fixes. The bug was not very serious and could only be exploited in a very limited way. For example, the bug required the user’s machine to be compromised to enable exploitation. The fix, however, ended up taking more than a week due to some complications. When the bug appeared 2 days before code freeze, there were many discussions about whether to perform the fix, and potentially hold up the release, or move the remediation to the next iteration.

When we take the time to analyze the risk and exposure of the finding, it is possible to make an educated decision as to which risk is better for the organization and the customers. In this situation, the assumption is that the user’s system would need to be compromised for the exploit to happen. If that is true, the application is already vulnerable to password sniffing or other attacks that would make this specific exploit a waste of time.

Forcing a fix at this point in the game increases the chances of introducing another vulnerability, possibly more severe than the one that we are trying to fix. Was that risk worth it?

Timing can have an effect on our judgement when it comes to resolving security issues. It should not be used as a scapegoat or a reason not to fix things. When analyzing the risk of an item, make sure you are also considering how it may affect the environment as a whole. The risk may not come directly from the flaw, but indirectly from how it is fixed. There is no hard and fast rule, which is exactly why we use a risk-based approach.

Engage your information security office or enterprise risk teams to help with the analysis. They may be able to provide a different point of view or insight you may have overlooked.

Open Redirect – Bad Implementation

I was recently looking through some code and happened to stumble across some logic that attempts to prohibit the application from redirecting to an external site. While this sounds like a pretty simple task, it is common to see it implemented incorrectly. Let’s look at the check that is being performed.

    string url = Request.QueryString["returnUrl"];
    if (string.IsNullOrWhiteSpace(url) || !url.StartsWith("/"))
    {
        Response.Redirect("~/default.aspx");
    }
    else
    {
        Response.Redirect(url);
    }

The first thing I noticed was the line that checks whether the url starts with a “/” character. This is a common mistake when developers try to stop open redirection. The assumption is that to redirect to an external site one would need the protocol, for example, http://www.developsec.com. By forcing the url to start with the “/” character, it is impossible to get the “http:” in there. Unfortunately, it is also possible to use //www.developsec.com as the url, and it will also be interpreted as an absolute url. In the example above, by passing in returnUrl=//www.developsec.com, the code will see the starting “/” character and allow the redirect. The browser would interpret the “//” as absolute and navigate to www.developsec.com.

After putting a quick test case together, I quickly proved out the point and was successful in bypassing this logic to enable a redirect to external sites.

Checking for Absolute or Relative Paths

.Net has built-in procedures for determining if a path is relative or absolute. The following code shows one way of doing this.

    string url = Request.QueryString["returnUrl"];
    Uri result;
    // True when the value parses as an absolute URI.
    bool isAbsolute = Uri.TryCreate(url, UriKind.Absolute, out result);
    if (!isAbsolute)
    {
        Response.Redirect(url);
    }
    else
    {
        Response.Redirect("~/default.aspx");
    }

In the above example, if the URL is absolute (starts with a protocol, http/https, or starts with “//”) it will just redirect to the default page. If the url is not absolute, but relative, it will redirect to the url passed in.

While doing some research I came across a recommendation to use the following:

if (Uri.IsWellFormedUriString(returnUrl,UriKind.Relative))

When using the above logic, it flagged //www.developsec.com as a relative path which would not be what we are looking for. The previous logic correctly identified this as an absolute url. There may be other methods of doing this and MVC provides some other functions as well that we will cover in a different post.
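Another way to approach the check is to test directly for the prefixes that send a browser to another host, instead of relying on how `Uri` classifies the value. The following is only a sketch: `IsLocalUrl` is a hypothetical helper written for this post, not a framework API, and it deliberately rejects more than it strictly has to.

```csharp
using System;

public static class RedirectSketch
{
    // Hypothetical helper: accept only paths rooted at this site, such as "/account/home".
    public static bool IsLocalUrl(string url)
    {
        if (string.IsNullOrWhiteSpace(url)) return false;
        if (url[0] != '/') return false; // must be site-rooted; rejects "http://..." and bare hosts

        foreach (char c in url)
        {
            // Control characters (tab, CR, LF) may be stripped by browsers and change the meaning.
            if (char.IsControl(c)) return false;
        }

        if (url.Length == 1) return true; // "/" on its own is fine

        // "//host" and "/\host" can be treated by browsers as pointing at another host.
        return url[1] != '/' && url[1] != '\\';
    }

    public static void Main()
    {
        Console.WriteLine(IsLocalUrl("/default.aspx"));
        Console.WriteLine(IsLocalUrl("//www.developsec.com"));
        Console.WriteLine(IsLocalUrl("http://www.developsec.com"));
    }
}
```

A caller would redirect to the supplied value only when the helper returns true and fall back to the default page otherwise.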

Conclusion

Make sure that you have a solid understanding of the problem and the different ways it works. It is easy to overlook some of these different techniques. There is a lot to learn, and we should be learning every day.

.Net EnableHeaderChecking

How often do you take untrusted input and insert it into response headers? This could be in a custom header or in the value of a cookie. Untrusted user data is always a concern when it comes to the security side of application development and response headers are no exception. This is referred to as Response Splitting or HTTP Header Injection.

Like Cross Site Scripting (XSS), HTTP Header Injection is an attack that results from untrusted data being used in a response. In this case, it is in a response header which means that the context is what we need to focus on. Remember that in XSS, context is very important as it defines the characters that are potentially dangerous. In the HTML context, characters like < and > are very dangerous. In Header Injection the greatest concern is over the carriage return (%0D or \r) and new line (%0A or \n) characters, or CRLF. Response headers are separated by CRLF, indicating that if you can insert a CRLF then you can start creating your own headers or even page content.

Manipulating the headers may allow you to redirect the user to a different page, perform cross-site scripting attacks, or even rewrite the page. While commonly overlooked, this is a very dangerous flaw.
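To make the mechanics concrete, the sketch below builds a raw header block by hand, the way a server with no header checking might. Everything in it is hypothetical and exists only for illustration (the BuildHeaders and EncodeCrLf helpers, the X-Custom header name, the injected cookie). It shows how a CRLF in the value starts a new header line, and how percent-encoding CR and LF keeps the value on one line.

```csharp
using System;

public static class HeaderSplitDemo
{
    // Naively writes a status line, one header, and the blank line
    // that ends the header block.
    public static string BuildHeaders(string untrustedValue)
    {
        return "HTTP/1.1 200 OK\r\n"
             + "X-Custom: " + untrustedValue + "\r\n"
             + "\r\n";
    }

    // Percent-encodes CR and LF so they can no longer end a header line.
    public static string EncodeCrLf(string value)
    {
        return value.Replace("\r", "%0D").Replace("\n", "%0A");
    }

    public static void Main()
    {
        string attack = "abc\r\nSet-Cookie: injected=1";

        // Raw value: the output contains an extra Set-Cookie header line.
        Console.WriteLine(BuildHeaders(attack));

        // Encoded value: everything stays inside the X-Custom header.
        Console.WriteLine(BuildHeaders(EncodeCrLf(attack)));
    }
}
```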

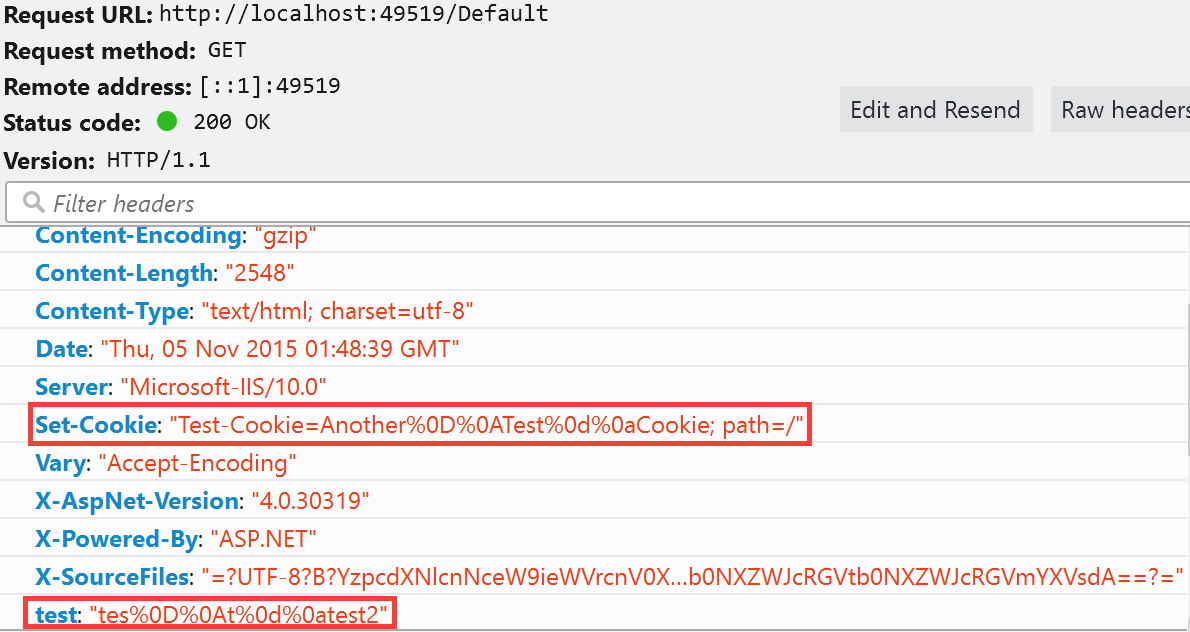

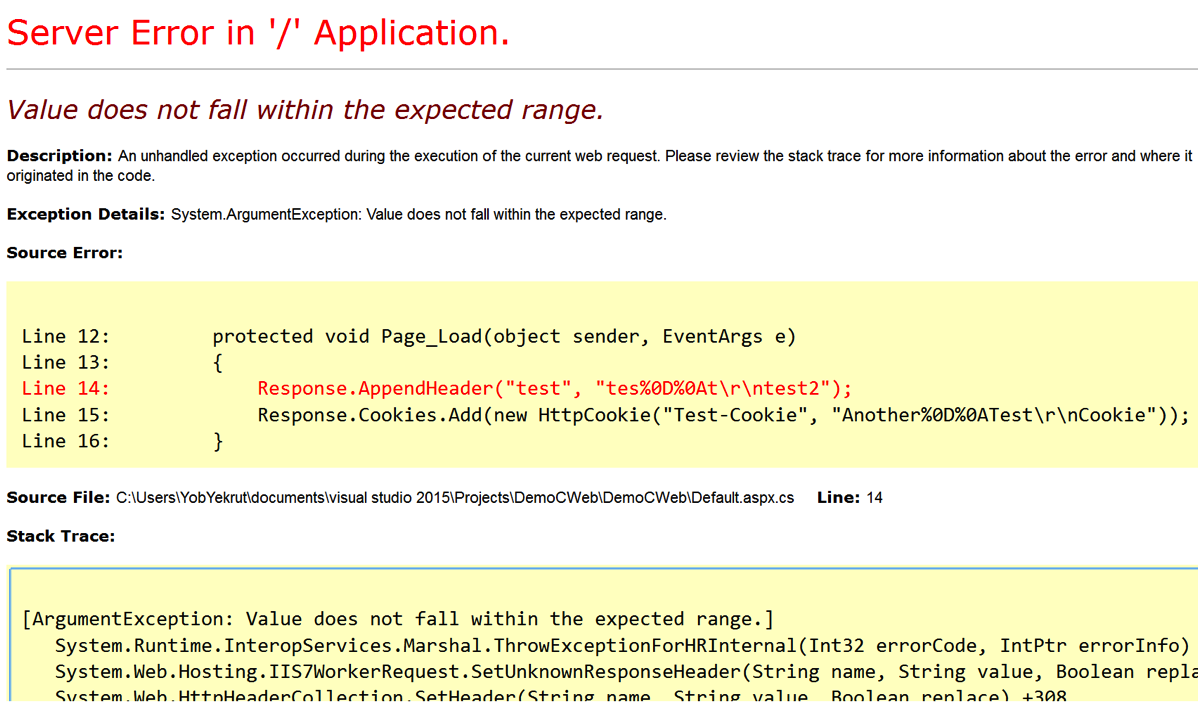

ASP.Net has a built-in defense mechanism, enabled by default, called EnableHeaderChecking. When EnableHeaderChecking is enabled, CRLF characters are converted to %0D%0A and the browser does not recognize them as a new line. Instead, they are just displayed as characters, not actually creating a line break. The following code snippet was created to show how the response headers look when adding CRLF into a header.

    public partial class _Default : Page
    {
        protected void Page_Load(object sender, EventArgs e)
        {
            Response.AppendHeader("test", "tes%0D%0At\r\ntest2");
            Response.Cookies.Add(new HttpCookie("Test-Cookie", "Another%0D%0ATest\r\nCookie"));
        }
    }

When the application runs, it will properly encode the CRLF as shown in the image below.

My next step was to disable EnableHeaderChecking in the web.config file.

<httpRuntime targetFramework="4.6" enableHeaderChecking="false"/>

My expectation was that I would get a response that allowed the CRLF and would show me a line break in the response headers. To my surprise, I got the error message below:

So why the error? After doing a little Googling I found an article about ASP.Net 2.0 Breaking Changes on IIS 7.0. The item of interest is “13. IIS always rejects new lines in response headers (even if ASP.NET enableHeaderChecking is set to false)”

I didn’t realize this change had been implemented, but apparently, if using IIS 7.0 or above, ASP.Net won’t allow newline characters in a response header. This is actually good news, as there is very little reason to allow new lines in a response header: if a new line were required, you could just create a new header. This is a great mitigation and, with the default configuration, helps protect ASP.Net applications from Response Splitting and HTTP Header Injection attacks.

Understanding the framework that you use and the server it runs on is critical in fully understanding your security risks. These built-in features can really help protect an application from security risks. Happy and Secure coding!

I did a podcast on this topic which you can find on the DevelopSec podcast.

Potentially Dangerous Request.Path Value was Detected…

Filed under: Development, Security

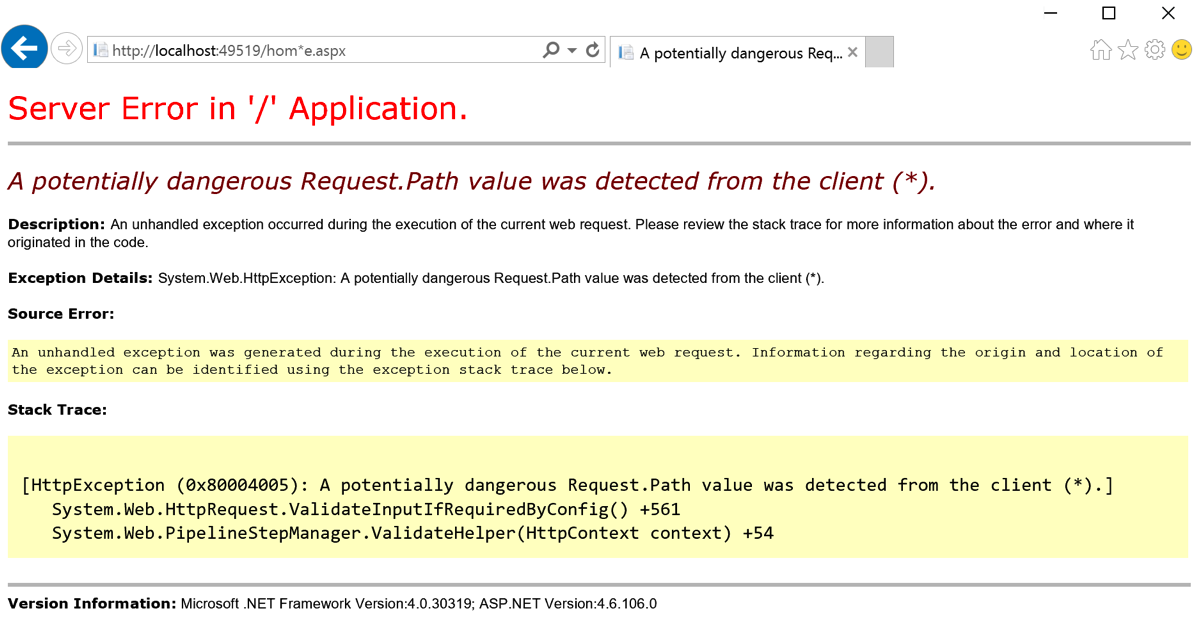

I have discussed request validation many times, usually in the context of the potentially dangerous input error message that appears when viewing a web page. Another interesting protection in ASP.Net is the built-in, on by default, Request.Path validation that occurs. Have you ever seen the error below when using or testing your application?

The screen above occurred because I placed the (*) character in the URL. In ASP.Net, there is a default set of defined illegal characters in the URL. This list is defined by RequestPathInvalidCharacters and can be configured in the web.config file. By default, the following characters are blocked:

- <

- >

- *

- %

- &

- :

- \\

It is important to note that these characters are blocked from being included in the URL path. This does not include the protocol specification or the query string. That should be obvious since the query string uses the & character to separate parameters.
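The scope of the check can be illustrated with a few lines of code. This is not how ASP.Net implements it. It is only a sketch, with hypothetical names (PathCharacterCheck, HasBlockedCharacter), that applies the list above to the path portion and ignores the query string.

```csharp
using System;

public static class PathCharacterCheck
{
    // The default blocked characters as listed in this post.
    private static readonly char[] Blocked = { '<', '>', '*', '%', '&', ':', '\\' };

    // Returns true when the path portion (everything before the first '?')
    // contains one of the blocked characters.
    public static bool HasBlockedCharacter(string pathAndQuery)
    {
        int queryStart = pathAndQuery.IndexOf('?');
        string path = queryStart >= 0
            ? pathAndQuery.Substring(0, queryStart)
            : pathAndQuery;

        return path.IndexOfAny(Blocked) >= 0;
    }

    public static void Main()
    {
        // The * is in the path, so this is flagged.
        Console.WriteLine(HasBlockedCharacter("/products/*"));
        // The & is only in the query string, so this is not flagged.
        Console.WriteLine(HasBlockedCharacter("/products/list?a=1&b=2"));
    }
}
```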

There are not many cases where your URL needs to use any of these default characters, but if there is a need to allow a specific character, you can override the default list. The override is done in the web.config file. The below snippet shows setting the new values (removing the < and > characters):

<httpRuntime requestPathInvalidCharacters="*,%,&amp;,:,\\"/>

Be aware that due to the web.config file being an xml file, you need to escape the < and > characters and set them as &lt; and &gt; respectively. The same applies to the & character, which is written as &amp;.

Remember that modifying the default security settings can expose your application to a greater security risk. Make sure you understand the risk of making these modifications before you perform them. It should be a rare occurrence to require a change to this default list. Understanding the platform is critical to understanding what is and is not being protected by default.

Securing The .Net Cookies

Filed under: Development, Security

I remember years ago when we talked about cookie poisoning, the act of modifying cookies to get the application to act differently. An example was the classic cookie used to indicate a user’s role in the system. Often times it would contain 1 for Admin or 2 for Manager, etc. Change the cookie value and all of a sudden you were the new admin on the block. You really don’t hear the phrase cookie poisoning anymore, I guess it was too dark.

There are still security risks around the cookies that we use in our application. I want to highlight 2 key attributes that help protect the cookies for your .Net application: Secure and httpOnly.

Secure Flag

The secure flag tells the browser that the cookie should only be sent to the server if the connection is using the HTTPS protocol. Ultimately this is indicating that the cookie must be sent over an encrypted channel, rather than over HTTP which is plain text.

HttpOnly Flag

The httpOnly flag tells the browser that the cookie should only be accessed to be sent to the server with a request, not by client-side scripts like JavaScript. This attribute helps protect the cookie from being stolen through cross-site scripting flaws.

Setting The Attributes

There are multiple ways to set these attributes of a cookie. Things get a little confusing when talking about session cookies or the forms authentication cookie, but I will cover that as I go. The easiest way to set these flags for all developer created cookies is through the web.config file. The following snippet shows the httpCookies element in the web.config.

    <system.web>
      <authentication mode="None" />
      <compilation targetFramework="4.6" debug="true" />
      <httpRuntime targetFramework="4.6" />
      <httpCookies httpOnlyCookies="true" requireSSL="true" />
    </system.web>

As you can see, you can set httpOnlyCookies to true to set the httpOnly flag on all of the cookies. In addition, the requireSSL setting sets the secure flag on all of the cookies, with a few exceptions.
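For a single developer-created cookie, the same two flags can also be set in code through the Secure and HttpOnly properties of HttpCookie. The snippet below is a minimal sketch. The CookieFactory class and CreateProtected method are hypothetical names used for illustration, and the code assumes a classic ASP.Net (System.Web) application.

```csharp
using System.Web;

public static class CookieFactory
{
    // Hypothetical helper: builds a cookie with both flags set.
    public static HttpCookie CreateProtected(string name, string value)
    {
        return new HttpCookie(name, value)
        {
            Secure = true,   // browser only sends the cookie over HTTPS
            HttpOnly = true  // cookie is not exposed to client-side script
        };
    }
}
```

Inside a page this could be used as Response.Cookies.Add(CookieFactory.CreateProtected("Test-Cookie", "value")).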

Some Exceptions

I stated earlier there are a few exceptions to the cookie configuration. The first I will discuss is the session cookie. The session cookie in ASP.Net is defaulted/hard-coded to set the httpOnly attribute. This should override any value set in the httpCookies element in the web.config. The session cookie does not default to requireSSL, and setting that value in the httpCookies element as shown above should work just fine for it.

The forms authentication cookie is another exception to the rules. Like the session cookie, it is hard-coded to httpOnly. The forms element of the web.config has a requireSSL attribute that will override what is found in the httpCookies element. Simply put, if you don’t set requireSSL="true" in the forms element then the cookie will not have the secure flag, even if requireSSL="true" is set in the httpCookies element.
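Put together, a configuration that covers both the forms authentication cookie and developer-created cookies might look like the fragment below. Treat it as a sketch: the loginUrl value is a placeholder, and your own authentication settings will differ.

```xml
<system.web>
  <authentication mode="Forms">
    <!-- loginUrl is a placeholder. requireSSL here controls the
         secure flag on the forms authentication cookie. -->
    <forms loginUrl="~/Account/Login" requireSSL="true" />
  </authentication>
  <!-- Covers the other cookies, as described above. -->
  <httpCookies httpOnlyCookies="true" requireSSL="true" />
</system.web>
```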

This is actually a good thing, even though it might not seem so yet. Here is the next thing about that forms requireSSL setting: when you set it, it will require that the web server is using a secure connection. Seems like common sense, but imagine a web farm where the load balancers offload SSL. In this case, while your web app uses HTTPS from client to server, in reality, the HTTPS stops at the load balancer and is then HTTP to the web server. This will throw an exception in your application.

I am not sure why Microsoft decided to actually check this value, since the secure flag is a direction for the browser, not the server. If you are in this situation you can still set the secure flag, you just need to do it a little differently. One option is to use your load balancer to set the flag when it sends any responses. Not all devices may support this, so check with your vendor. The other option is to programmatically set the flag right before the response is sent to the user. The basic process is to find the cookie and just set the .Secure property to true.
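One way to do the programmatic option is from Global.asax, at the end of each request. The code below is a sketch under a few assumptions: the client connection really is HTTPS (terminated at the load balancer), response buffering is on so the headers have not been flushed yet, and you want the flag on every outgoing cookie rather than on selected ones.

```csharp
using System;
using System.Web;

public class Global : HttpApplication
{
    // Runs at the end of request processing, while the response
    // cookies can still be changed (assuming buffered output).
    protected void Application_EndRequest(object sender, EventArgs e)
    {
        // Walk every cookie queued for this response and mark it secure.
        for (int i = 0; i < Response.Cookies.Count; i++)
        {
            HttpCookie cookie = Response.Cookies[i];
            if (cookie != null)
            {
                cookie.Secure = true;
            }
        }
    }
}
```

If only the forms authentication or session cookie should be touched, the loop can compare cookie.Name against the cookie names your application uses before setting the property.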

Final Thoughts

While there are other security concerns around cookies, I see the secure and httpOnly flag commonly misconfigured. While it does not seem like much, these flags go a long way to helping protect your application. ASP.Net has done some tricky configuration of how this works depending on the cookie, so hopefully this helps sort some of it out. If you have questions, please don’t hesitate to contact me. I will be putting together something a little more formal to hopefully clear this up a bit more in the near future.