.Net EnableHeaderChecking

How often do you take untrusted input and insert it into response headers? This could be in a custom header or in the value of a cookie. Untrusted user data is always a concern when it comes to the security side of application development, and response headers are no exception. The attack that abuses untrusted data in response headers is referred to as Response Splitting or HTTP Header Injection.

Like Cross-Site Scripting (XSS), HTTP Header Injection is an attack that results from untrusted data being used in a response. In this case, it is in a response header, which means that the context is what we need to focus on. Remember that in XSS, context is very important as it defines the characters that are potentially dangerous. In the HTML context, characters like < and > are very dangerous. In Header Injection the greatest concern is over the carriage return (%0D or \r) and line feed (%0A or \n) characters, together known as CRLF. Response headers are separated by CRLF, which means that if you can insert a CRLF then you can start creating your own headers or even page content.

Manipulating the headers may allow you to redirect the user to a different page, perform cross-site scripting attacks, or even rewrite the page. While commonly overlooked, this is a very dangerous flaw.

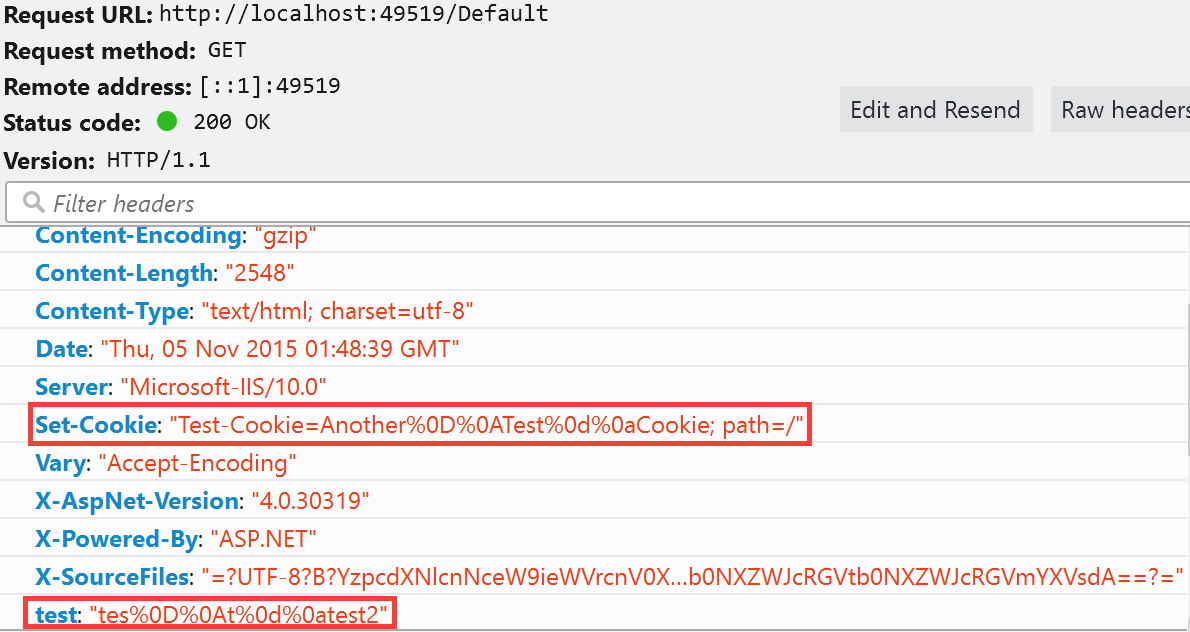

ASP.Net has a built-in defense mechanism called EnableHeaderChecking, which is enabled by default. When EnableHeaderChecking is enabled, CRLF characters are converted to %0D%0A, so the browser does not recognize them as a new line. Instead, they are treated as literal characters and no line break is created. The following code snippet was created to show how the response headers look when adding CRLF into a header.

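    using System;
    using System.Web;
    using System.Web.UI;

    public partial class _Default : Page
    {
        protected void Page_Load(object sender, EventArgs e)
        {
            // Each value contains a URL-encoded CRLF (%0D%0A) and a literal CRLF (\r\n).
            Response.AppendHeader("test", "tes%0D%0At\r\ntest2");
            Response.Cookies.Add(new HttpCookie("Test-Cookie", "Another%0D%0ATest\r\nCookie"));
        }
    }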

When the application runs, it will properly encode the CRLF as shown in the image below.

My next step was to disable EnableHeaderChecking in the web.config file.

<httpRuntime targetFramework="4.6" enableHeaderChecking="false"/>

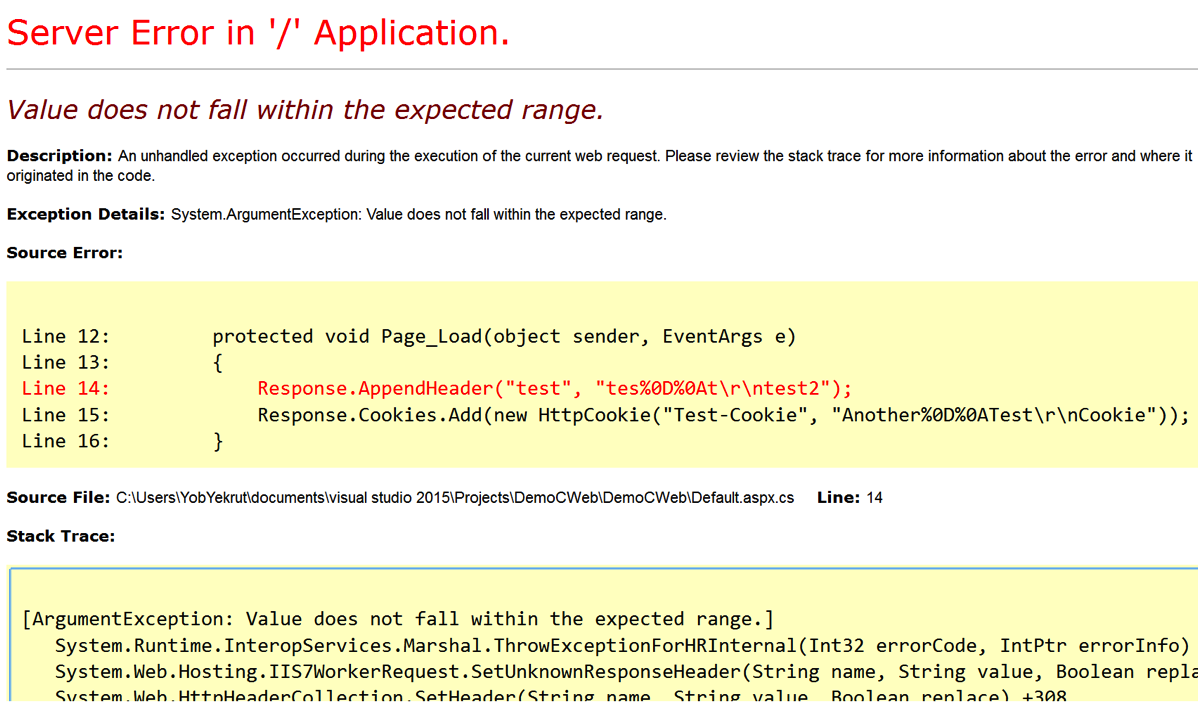

My expectation was that I would get a response that allowed the CRLF and would show me a line break in the response headers. To my surprise, I got the error message below:

So why the error? After doing a little Googling I found an article about ASP.Net 2.0 Breaking Changes on IIS 7.0. The item of interest is “13. IIS always rejects new lines in response headers (even if ASP.NET enableHeaderChecking is set to false)”

I didn’t realize this change had been implemented, but apparently, if using IIS 7.0 or above, ASP.Net won’t allow newline characters in a response header. This is actually good news, as there is very little reason to allow new lines in a response header. If a new line were ever required, you could just create a new header instead. This is a great mitigation, and with the default configuration it helps protect ASP.Net applications from Response Splitting and HTTP Header Injection attacks.
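As a defense-in-depth measure, an application can also refuse line breaks itself before a value ever reaches a header, rather than relying only on the framework and the server. The following is a minimal sketch of that idea. The HeaderValueGuard class and its method names are hypothetical and are not part of ASP.Net.

```csharp
using System;

// Hypothetical helper (not part of ASP.Net): rejects header values that
// contain a raw carriage return or line feed.
public static class HeaderValueGuard
{
    // True when the value contains a literal CR or LF character.
    public static bool ContainsLineBreak(string value)
    {
        if (value == null) return false;
        return value.IndexOf('\r') >= 0 || value.IndexOf('\n') >= 0;
    }

    // Returns the value unchanged when it is a single line; otherwise throws,
    // so attacker-controlled line breaks are never written into a header.
    public static string RequireSingleLine(string value)
    {
        if (ContainsLineBreak(value))
            throw new ArgumentException("Header value must not contain CR or LF.", nameof(value));
        return value;
    }
}
```

A call such as Response.AppendHeader("test", HeaderValueGuard.RequireSingleLine(userInput)) would then fail fast on a literal \r or \n. Note that the text %0D%0A is just six ordinary characters to this check; it only becomes a line break if something URL-decodes it first.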

Understanding the framework that you use and the server it runs on is critical in fully understanding your security risks. These built-in features can really help protect an application from security risks. Happy and Secure coding!

I did a podcast on this topic which you can find on the DevelopSec podcast.

Securing The .Net Cookies

Filed under: Development, Security

I remember years ago when we talked about cookie poisoning, the act of modifying cookies to get the application to act differently. An example was the classic cookie used to indicate a user’s role in the system. Oftentimes it would contain 1 for Admin or 2 for Manager, etc. Change the cookie value and all of a sudden you were the new admin on the block. You really don’t hear the phrase cookie poisoning anymore; I guess it was too dark.

There are still security risks around the cookies that we use in our application. I want to highlight 2 key attributes that help protect the cookies for your .Net application: Secure and httpOnly.

Secure Flag

The secure flag tells the browser that the cookie should only be sent to the server if the connection is using the HTTPS protocol. Ultimately this is indicating that the cookie must be sent over an encrypted channel, rather than over HTTP which is plain text.

HttpOnly Flag

The httpOnly flag tells the browser that the cookie should only be sent to the server with a request and should not be accessible to client-side scripts like JavaScript. This attribute helps protect the cookie from being stolen through cross-site scripting flaws.

Setting The Attributes

There are multiple ways to set these attributes of a cookie. Things get a little confusing when talking about session cookies or the forms authentication cookie, but I will cover that as I go. The easiest way to set these flags for all developer created cookies is through the web.config file. The following snippet shows the httpCookies element in the web.config.

    <system.web>
        <authentication mode="None" />
        <compilation targetFramework="4.6" debug="true" />
        <httpRuntime targetFramework="4.6" />
        <httpCookies httpOnlyCookies="true" requireSSL="true" />
    </system.web>

As you can see, you can set httpOnlyCookies to true to set the httpOnly flag on all of the cookies. In addition, the requireSSL setting sets the secure flag on all of the cookies, with a few exceptions.

Some Exceptions

I stated earlier there are a few exceptions to the cookie configuration. The first I will discuss is the session cookie. The session cookie in ASP.Net is defaulted/hard-coded to set the httpOnly attribute. This should override any value set in the httpCookies element in the web.config. The session cookie does not default to requireSSL, and setting that value in the httpCookies element as shown above should work just fine for it.

The forms authentication cookie is another exception to the rules. Like the session cookie, it is hard-coded to httpOnly. The forms element of the web.config has a requireSSL attribute that will override what is found in the httpCookies element. Simply put, if you don’t set requireSSL="true" in the forms element then the cookie will not have the secure flag, even if requireSSL="true" is set in the httpCookies element.

This is actually a good thing, even though it might not seem so yet. Here is the next thing about that forms requireSSL setting: when you set it, it requires that the web server itself is using a secure connection. Seems like common sense, but imagine a web farm where the load balancers offload SSL. In this case, while your web app appears to use HTTPS from client to server, in reality the HTTPS stops at the load balancer and the traffic is then HTTP to the web server. This will throw an exception in your application.

I am not sure why Microsoft decided to actually check this value, since the secure flag is a direction for the browser, not the server. If you are in this situation you can still set the secure flag; you just need to do it a little differently. One option is to use your load balancer to set the flag when it sends any responses. Not all devices may support this, so check with your vendor. The other option is to programmatically set the flag right before the response is sent to the user. The basic process is to find the cookie and just set its Secure property to true.
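The programmatic option could look something like the sketch below. It assumes a Global.asax.cs in a classic System.Web application, assumes the response is still buffered when the request ends, and marks every outgoing cookie rather than finding one by name. The MarkAllSecure helper is a hypothetical name introduced here for illustration.

```csharp
using System;
using System.Web;

// Sketch for Global.asax.cs. Assumes TLS is terminated at a load balancer,
// so the application itself only ever sees plain HTTP requests.
public class Global : HttpApplication
{
    // Hypothetical helper: sets the Secure flag on every cookie in the collection.
    public static void MarkAllSecure(HttpCookieCollection cookies)
    {
        for (int i = 0; i < cookies.Count; i++)
        {
            cookies[i].Secure = true;
        }
    }

    // ASP.Net wires this handler up by naming convention; it runs at the end
    // of each request, after the page has added its cookies to the response.
    protected void Application_EndRequest(object sender, EventArgs e)
    {
        MarkAllSecure(Response.Cookies);
    }
}
```

To target only the forms authentication cookie, the loop could compare each cookie's Name against FormsAuthentication.FormsCookieName instead of marking everything. Test this behind your own load balancer before relying on it.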

Final Thoughts

While there are other security concerns around cookies, I see the secure and httpOnly flags commonly misconfigured. While it does not seem like much, these flags go a long way toward helping protect your application. ASP.Net has done some tricky configuration of how this works depending on the cookie, so hopefully this helps sort some of it out. If you have questions, please don’t hesitate to contact me. I will be putting together something a little more formal to hopefully clear this up a bit more in the near future.

ASP.Net Insufficient Session Timeout

Filed under: Development, Security, Testing

A common security concern found in ASP.Net applications is Insufficient Session Timeout. In this article, the focus is not on the ASP.Net session that is not effectively terminated, but rather the forms authentication cookie that is still valid after logout.

How to Test

- User is currently logged into the application.

- User captures the ASPAuth cookie (name may be different in different applications).

- Cookie can be captured using a browser plugin or a proxy used for request interception.

- User saves the captured cookie for later use.

- User logs out of the application.

- User requests a page on the application, passing the previously captured authentication cookie.

- The page is processed and access is granted.

Typical Logout Options

- The application calls FormsAuthentication.SignOut()

- The application sets the Cookie.Expires property to a previous DateTime.

Cookie Still Works!!

Following the user process above, the cookie still provides access to the application as if the logout never occurred. So what is the deal? The key is that unlike a true “session”, which is maintained on the server, the forms authentication cookie is self-contained. It does not have a server-side component to stay in sync with. Among other things, the authentication cookie has your username or ID, possibly roles, and an expiration date. When the cookie is received by the server it will be decrypted (please tell me you are using protection="All") and the data extracted. If the cookie’s internal expiration date has not passed, the cookie is accepted and processed as a valid cookie.
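To make the “self-contained” idea concrete, here is a simplified model of such a token. It is not how ASP.Net builds or protects its forms authentication ticket. The class name, the token layout, and the HMAC-only protection (no encryption) are all assumptions made for illustration. The point is only that validation consults nothing but the token and a server-side key.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Simplified, hypothetical model of a self-contained authentication token.
// NOT the real forms authentication ticket format.
public sealed class SelfContainedToken
{
    private readonly byte[] _key;

    public SelfContainedToken(byte[] key) { _key = key; }

    // Assumed layout: "user|expiryTicksUtc|base64(HMAC)". User must not contain '|'.
    public string Issue(string user, DateTime expiresUtc)
    {
        string payload = user + "|" + expiresUtc.Ticks.ToString(CultureInfo.InvariantCulture);
        return payload + "|" + Sign(payload);
    }

    // Accepts the token when the signature matches and the embedded expiry has
    // not passed. No server-side session or logout state is consulted.
    public bool IsValid(string token, DateTime nowUtc)
    {
        if (token == null) return false;
        string[] parts = token.Split('|');
        if (parts.Length != 3) return false;
        if (!SameText(Sign(parts[0] + "|" + parts[1]), parts[2])) return false;
        long ticks;
        if (!long.TryParse(parts[1], NumberStyles.None, CultureInfo.InvariantCulture, out ticks))
            return false;
        return nowUtc.Ticks < ticks;
    }

    private string Sign(string payload)
    {
        using (var hmac = new HMACSHA256(_key))
        {
            return Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(payload)));
        }
    }

    // Compares without exiting early on the first differing character.
    private static bool SameText(string a, string b)
    {
        if (a.Length != b.Length) return false;
        int diff = 0;
        for (int i = 0; i < a.Length; i++) diff |= a[i] ^ b[i];
        return diff == 0;
    }
}
```

In this model, a copy of the token captured before logout keeps passing IsValid until its embedded expiry, because logging out only removes the browser's copy and changes nothing the server checks.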

So what did FormsAuthentication.SignOut() do?

If you look under the hood of the .Net framework (it has been a few years, but I doubt much has changed), you will see that FormsAuthentication.SignOut() really just removes the cookie from the browser. There is no code to perform any server function; it merely asks the browser to remove the cookie by clearing the value and back-dating the expires property. While this does work to remove the cookie from the browser, it doesn’t have any effect on a copy of the original cookie you may have captured. The only sure way to really make the cookie inactive (before the internal timeout occurs) would be to change your machine key in the web.config file. This is not a reasonable solution.

Possible Mitigations

You should be protecting your cookie by setting the httpOnly and Secure properties. HttpOnly tells the browser not to allow javascript to have access to the cookie value. This is an important step to protect the cookie from theft via cross-site scripting. The secure flag tells the browser to only send the authentication cookie over HTTPS, making it much more difficult for an attacker to intercept the cookie as it is sent to the server.

Set a short timeout (15 minutes) on the cookie to decrease the window an attacker has to obtain the cookie.

You could attempt to build a tracking system to manage the authentication cookie on the server to disable it before its time has expired. Maybe something for another post.
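One shape such a tracking system could take is a server-side list of revoked tickets that is consulted on every request. The sketch below is only an outline under some assumptions: each ticket carries a unique identifier (for example, a GUID stored in the ticket's user data), the application runs on a single server, and the RevokedTicketStore class is a hypothetical name.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical in-memory store of tickets that were logged out before expiry.
public sealed class RevokedTicketStore
{
    // Ticket id -> the ticket's own expiration time (UTC).
    private readonly ConcurrentDictionary<string, DateTime> _revoked =
        new ConcurrentDictionary<string, DateTime>();

    // Called at logout, in addition to removing the cookie from the browser.
    public void Revoke(string ticketId, DateTime ticketExpiresUtc)
    {
        _revoked[ticketId] = ticketExpiresUtc;
    }

    // Called on each authenticated request; a revoked ticket is refused
    // even though its internal expiration date has not passed.
    public bool IsRevoked(string ticketId)
    {
        return _revoked.ContainsKey(ticketId);
    }

    // Drops entries whose tickets have expired on their own, since those
    // would be rejected anyway. Returns the number of entries removed.
    public int Purge(DateTime nowUtc)
    {
        int removed = 0;
        foreach (var entry in _revoked)
        {
            DateTime ignored;
            if (entry.Value <= nowUtc && _revoked.TryRemove(entry.Key, out ignored))
            {
                removed++;
            }
        }
        return removed;
    }
}
```

Storing only revoked tickets keeps the list small, because entries can be purged once the ticket's own timeout has passed. In a web farm the store would need to live somewhere shared, such as a database or distributed cache, rather than in process memory.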

Understand how the application is used to determine how risky this issue may be. If the application is not used on shared/public systems and the cookie is protected as mentioned above, the attack surface is significantly decreased.

Final Thoughts

If you are facing this type of finding and it is a forms authentication cookie issue, not the Asp.Net session cookie, take the time to understand the risk. Make sure you understand the settings you have and the priority and sensitivity of the application to properly understand “your” risk level. Don’t rely on third party risk ratings to determine how serious the flaw is. In many situations, this may be a low priority, however in the right app, this could be a high priority.

Static Analysis: Analyzing the Options

Filed under: Development, Security, Testing

When it comes to automated testing for applications there are two main types: Dynamic and Static.

- Dynamic scanning is where the scanner is analyzing the application in a running state. This method doesn’t have access to the source code or the binary itself, but is able to see how things function during runtime.

- Static analysis is where the scanner is looking at the source code or the binary output of the application. While this type of analysis doesn’t see the code as it is running, it has the ability to trace how data flows through the application down to the function level.

Dynamic scanning, which analyzes a system as it is running, is an important component of any secure development workflow. Before the application is running, the focus shifts to the source code, which is where static analysis fits in. At this stage it is possible to identify many common vulnerabilities while integrating into your build processes.

If you are thinking about adding static analysis to your process there are a few things to think about. Keep in mind there is not just one factor that should be the decision maker. Budget, in-house experience, application type and other factors will combine to make the right decision.

Disclaimer: I don’t endorse any products I talk about here. I do have direct experience with the ones I mention and that is why they are mentioned. I prefer not to speak to those products I have never used.

Budget

I hate to list this first, but honestly it is a pretty big factor in your implementation of static analysis. The vast options that exist for static analysis range from FREE to VERY EXPENSIVE. It is good to have an idea of what type of budget you have at hand to better understand what option may be right.

Free Tools

There are a few free tools out there that may work for your situation. Most of these tools depend on the programming language you use, unlike many of the commercial tools that support many of the common languages. For .Net developers, CAT.Net is the first static analysis tool that comes to mind. The downside is that it has not been updated in a long time. While it may still help a little, it will not compare to many of the commercial tools that are available.

In the Ruby world, I have used Brakeman which worked fairly well. You may find you have to do a little fiddling to get it up and running properly, but if you are a Ruby developer then this may be a simple task.

Managed Services or In-House

Can you manage a scanner in-house or is this something better delegated to a third party that specializes in the technology?

This can be a difficult question because it may involve many facets of your development environment. Choosing to host the solution in-house, as with HP’s Fortify SCA, may require a lot more internal knowledge than a managed solution. Do you have the resources available that know the product or that can learn it? Given the right resources, in-house tools can be very beneficial. One of the biggest roadblocks to in-house solutions is the cost. Most of them are very expensive. Here are a few in-house benefits:

- Ability to integrate directly into your Continuous Integration (CI) operations

- Ability to customize the technology for your environment/workflow

- Ability to create extensions to tune the results

Choosing to go with a managed solution works well for many companies. Whether it is because the development team is small, resources aren’t available, or the budget is limited, using a 3rd party may be the right solution. There is always the question of whether you are ok with sending your code to a 3rd party, but many are ok with this to get the solution they need. Many of the managed services have the additional benefit of reducing false positives in the results. This can be one of the most time-consuming pieces of a static analysis tool, right there with getting it set up and configured properly. Some scans may return tens of thousands of results. Weeding through all of those can be very time-consuming and have a negative effect on the poor person stuck doing it. Having a company manage that portion can be very beneficial and cost effective.

Conclusion

Picking the right static analysis solution is important, but can be difficult. Take the time to determine what your end goal is when implementing static analysis. Are you looking for something that is good, but not customizable to your environment, or something that is highly extensible and integrated closely with your workflow? Unfortunately, sometimes our budget may limit what we can do, but we have to start someplace. Take the time to talk to other people that have used the solutions you are looking at. Has their experience been good? What did/do they like? What don’t they like? Remember that static analysis is not the complete solution, but rather a component of a solution. Dropping this into your workflow won’t make you secure, but it will help decrease the attack surface area if implemented properly.

A Pen Test is Coming!!

Filed under: Development, Security, Testing

You have been working hard to create the greatest app in the world. Ok, so maybe it is just a simple business application, but it is still important to you. You have put countless hours of hard work into creating this masterpiece. It looks awesome, and does everything that the business has asked for. Then you get the email from security: Your application will undergo a penetration test in two weeks. Your heart skips a beat and sinks a little as you recall everything you have heard about this experience. Most likely, your immediate action is to go on the defensive. Why would your application need a penetration test? Of course it is secure, we do use HTTPS. No one would attack us, we are small. Take a breath. It is going to be alright.

All too often, when I go into a penetration test, the developers start on the defensive. They don’t really understand why these ‘other’ people have to come in and test their application. I understand the concerns. History has shown that many of these engagements are truly considered adversarial. The testers jump for joy when they find a security flaw. They tell you how bad the application is and how simple the fix is, leading to you feeling about the size of an ant. This is often due to a lack of good communication skills.

Penetration testing is adversarial. It is an offensive assessment to find security weaknesses in your systems. This is an attempt to simulate an attacker against your system. Of course there are many differences, such as scope, timing and rules, but the goal is the same: let’s see what we can do on your system. Unfortunately, I find that many testers don’t have the communication skills to relay the information back to the business and developers in a way that is positive. I can’t tell you how many times I have heard people describe their job as great because they get to come in, tell you how bad you suck and then leave. If that is your penetration tester, find a new one. First, that attitude breaks down the communication with the client and doesn’t help promote a secure atmosphere. We don’t get anywhere by belittling the teams that have worked hard to create their application. Second, a penetration test should provide solid recommendations to the client on how they can work to resolve the issues identified. Just listing a bunch of flaws is fairly useless to a company.

These engagements should be worth everyone’s time. There should be positive communication between the developers and the testing team. Remember that many engagements are short lived so the more information you can provide the better the assessment you are going to get. The engagement should be helpful. With the right company, you will get a solid assessment and recommendations that you can do something with. If you don’t get that, time to start looking at another company for testing. Make sure you are ready for the test. If the engagement requires an environment to test in, have it all set up. That includes test data (if needed). The testers want to hit the ground running. If credentials are needed, make sure those are available too. The more help you can be, the more you will benefit from the experience.

As much as you don’t want to hear it, there is a very high chance the test will find vulnerabilities. While it would be great if applications didn’t have vulnerabilities, it is fairly rare to find one that has none. Use this experience to learn and train on security issues. Take the feedback as constructive criticism, not someone attacking you. Trust me, you want the pen testers to find these flaws before a real attacker does.

Remember that this is for your benefit. We as developers also need to stay positive. The last thing you want to do is challenge the pen testers by saying your app is not vulnerable. The teams that usually do that are the most vulnerable. Stay positive and it will be a great learning experience.

Application Logging: The Next Great Wonder

Filed under: Security

What type of logging do you perform in your applications? Do you just log exceptions? Many places I have worked and many developers I have talked to over the years mostly focus on logging troubleshooting artifacts. Where did the application break, and what may have caused it. We do this because we want to be able to fix the bugs that may crop up that cause our users difficulty in using the application.

While this makes sense to many developers, because it is directly related to the pain they face in troubleshooting, it leaves a lot to be desired. When we think about a security perspective, there is much more that should be considered. The simplest events are successful and unsuccessful authentication attempts. Many developers will say they log the first, but the latter is usually overlooked. In reality, when failed attempts are logged it is most likely to help with account lockout, and they are assumed not to serve much other purpose. But they do. Those logs can be used to identify brute force attacks against a user’s account.
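As a rough illustration of how failed attempts can reveal a brute force attack, the sketch below counts failures per account inside a sliding time window. The FailedLoginMonitor class, the threshold, and the window size are all hypothetical; a real application would also write each event to its log store and would need to tune these values to its own users.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical detector: flags an account once it accumulates a given number
// of failed logins within a sliding time window.
public sealed class FailedLoginMonitor
{
    private readonly int _threshold;
    private readonly TimeSpan _window;
    private readonly object _sync = new object();
    private readonly Dictionary<string, Queue<DateTime>> _failures =
        new Dictionary<string, Queue<DateTime>>(StringComparer.OrdinalIgnoreCase);

    public FailedLoginMonitor(int threshold, TimeSpan window)
    {
        _threshold = threshold;
        _window = window;
    }

    // Records one failed attempt and returns true when the account has reached
    // the threshold within the window. Assumes timestamps arrive in
    // non-decreasing order for each account.
    public bool RecordFailure(string username, DateTime whenUtc)
    {
        lock (_sync)
        {
            Queue<DateTime> times;
            if (!_failures.TryGetValue(username, out times))
            {
                times = new Queue<DateTime>();
                _failures[username] = times;
            }
            times.Enqueue(whenUtc);
            // Forget attempts that have fallen out of the window.
            while (times.Count > 0 && whenUtc - times.Peek() > _window)
            {
                times.Dequeue();
            }
            return times.Count >= _threshold;
        }
    }
}
```

Tracking by username catches repeated guessing against one account. Tracking by source address as well would be needed to notice one attacker trying a single password across many accounts.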

Other events that are critical include logoff events, password change events and even the access of sensitive data. Not many days go by that we don’t see word of a data breach. If your application accesses sensitive data, how do you know who has looked at it? If records are meant to be viewed one at a time, but someone starts pulling hundreds at a time, would you notice? If a breach occurs, are you able to go back into the logs and show what data has been viewed and by whom?
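The questions above map fairly directly onto an audit trail of who viewed which record and when. The following sketch keeps that trail in memory purely for illustration. The AccessAuditLog and AccessAuditEntry names are hypothetical, and a real application would persist these entries to a durable, tamper-resistant store.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One audit entry: who viewed which record, and when.
public sealed class AccessAuditEntry
{
    public AccessAuditEntry(string user, string recordId, DateTime whenUtc)
    {
        User = user;
        RecordId = recordId;
        WhenUtc = whenUtc;
    }

    public string User { get; private set; }
    public string RecordId { get; private set; }
    public DateTime WhenUtc { get; private set; }
}

// Hypothetical in-memory audit trail for access to sensitive records.
public sealed class AccessAuditLog
{
    private readonly List<AccessAuditEntry> _entries = new List<AccessAuditEntry>();
    private readonly object _sync = new object();

    public void RecordView(string user, string recordId, DateTime whenUtc)
    {
        lock (_sync) { _entries.Add(new AccessAuditEntry(user, recordId, whenUtc)); }
    }

    // After a breach: which distinct records did this user view?
    public IList<string> RecordsViewedBy(string user)
    {
        lock (_sync)
        {
            return _entries.Where(e => e.User == user)
                           .Select(e => e.RecordId)
                           .Distinct()
                           .ToList();
        }
    }

    // For monitoring: how many views has this user made since the given time?
    public int ViewsSince(string user, DateTime sinceUtc)
    {
        lock (_sync)
        {
            return _entries.Count(e => e.User == user && e.WhenUtc >= sinceUtc);
        }
    }
}
```

A scheduled job could compare ViewsSince against what is normal for the application and raise an alert when someone who usually opens a handful of records suddenly pulls hundreds.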

Logging and auditing play a critical role in an application and finding the right balance of data stored is somewhat an art. Some people may say that you need to just grab everything. That doesn’t always work. Performance seems to be the first concern that comes to mind. I didn’t say it would be easy to throw a logging plan together.

You have to understand your application and the business that it supports. Information and events that are important to one business may not be as important in another business. That is ok. This isn’t a one-size-fits-all solution. Take the time to analyze your situation and log what feels right. But put more thought into it than just troubleshooting. Think about how you will use that stored data if a breach occurs.

In addition to logging the data, there needs to be a plan in place to look at that data. Whether it is an automated tool, or manual (hopefully a mix of the two) you can’t identify something if you don’t look. All too often we see breaches occur and not be noticed for months or even years afterward. In many of these cases if someone had just been looking at the logs, it would have been identified immediately and the risk of the breach could be minimized.

There are tools out there to help with logging in your application, no matter what your platform of choice is. Logging is not usually a bolt-on solution; you have to be thinking about it before you build your application. Take the time up front to do this so when something happens, you have all the data you need to protect yourself and your customers.