What is the difference between encryption and hashing?

Filed under: Development, Security

Encryption is a reversible process, whereas hashing is one-way only. Data that has been encrypted can be decrypted back to the original value. Data that has been hashed cannot be transformed back to its original value.

Encryption is used to protect sensitive information, such as Social Security Numbers or credit card numbers, that may need to be read back in its original form at some point.

Hashing is used to create data signatures or comparison-only features. For example, user passwords used for login should be hashed because the program doesn’t need to store the actual password. When the user attempts to log in, the system generates a hash of the supplied password using the same technique that produced the stored hash and compares the two. If they match, the passwords are the same.
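As a rough illustration of the login comparison described above, here is a minimal C# sketch. It assumes .Net 6 or later and uses PBKDF2 with a per-user salt, which is my choice for the example and not something the paragraph above prescribes. The class name, method names, and the iteration count are hypothetical.

```csharp
using System;
using System.Security.Cryptography;

// Hypothetical helper for illustration only; parameters are assumptions.
public static class PasswordHasher
{
    private const int SaltSize = 16;        // bytes of random salt per password
    private const int HashSize = 32;        // bytes of derived hash
    private const int Iterations = 100_000; // assumed work factor, tune as needed

    // Called when the password is first set: store the salt and hash, never the password.
    public static (byte[] Salt, byte[] Hash) HashPassword(string password)
    {
        byte[] salt = RandomNumberGenerator.GetBytes(SaltSize);
        byte[] hash = Rfc2898DeriveBytes.Pbkdf2(
            password, salt, Iterations, HashAlgorithmName.SHA256, HashSize);
        return (salt, hash);
    }

    // Called at login: hash the supplied password the same way and compare.
    public static bool Verify(string password, byte[] salt, byte[] expectedHash)
    {
        byte[] actual = Rfc2898DeriveBytes.Pbkdf2(
            password, salt, Iterations, HashAlgorithmName.SHA256, expectedHash.Length);
        // Constant-time comparison to avoid leaking how many bytes matched.
        return CryptographicOperations.FixedTimeEquals(actual, expectedHash);
    }
}
```

The stored salt and hash are all the system needs at login; the original password is never recovered.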

Another example scenario with hashing is with file downloads to verify integrity. The supplier of the file will create a hash of the file on the server so when you download the file you can then generate the hash locally and compare them to make sure the file is correct.
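The download check could look something like the following sketch. It assumes the supplier publishes a SHA-256 hash as a hex string; the algorithm choice and the `FileIntegrity` name are assumptions for the example.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;

// Hypothetical helper for illustration only.
public static class FileIntegrity
{
    // Returns the SHA-256 hash of the stream as an uppercase hex string.
    public static string Sha256Hex(Stream data)
    {
        using SHA256 sha = SHA256.Create();
        return Convert.ToHexString(sha.ComputeHash(data));
    }

    // Hashes the downloaded file locally and compares it to the published value.
    public static bool Matches(string path, string publishedHex)
    {
        using FileStream file = File.OpenRead(path);
        return string.Equals(Sha256Hex(file), publishedHex, StringComparison.OrdinalIgnoreCase);
    }
}
```

If `Matches` returns false, the file on disk is not the file the supplier hashed.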

XmlSecureResolver: XXE in .Net

Filed under: Development, Security, Testing

tl;dr

- Microsoft .Net 4.5.2 and above protect against XXE by default.

- It is possible to become vulnerable by explicitly setting an XmlUrlResolver on an XmlDocument.

- A secure alternative is to use the XmlSecureResolver object which can limit allowed domains.

- XmlSecureResolver appeared to work correctly in .Net 4.X, but did not appear to work in .Net 6.

I wrote about XXE in .net a few years ago (https://www.jardinesoftware.net/2016/05/26/xxe-and-net/) and I recently started doing some more research into how it works with the later versions. At the time, the focus was just on versions prior to 4.5.2 and then those after it, and there wasn’t a lot after it. Now a few more versions have appeared, so I started looking at some examples.

I stumbled across the XmlSecureResolver class. It piqued my curiosity, so I started to figure out how it worked. I started with the following code snippet. Note that this was targeting .Net 6.0.

    using System;
    using System.Xml;

    static void Load()
    {
        // The entity points at labs.developsec.com, not the domain the resolver is told to allow.
        string xml = "<?xml version='1.0' encoding='UTF-8' ?><!DOCTYPE foo [<!ENTITY xxe SYSTEM 'https://labs.developsec.com/test/xxe_test.php'>]><root><doc>&xxe;</doc><foo>Test</foo></root>";

        XmlDocument xmlDoc = new XmlDocument();
        // Wrap an XmlUrlResolver and restrict it (in theory) to jardinesoftware.com.
        xmlDoc.XmlResolver = new XmlSecureResolver(new XmlUrlResolver(), "https://www.jardinesoftware.com");
        xmlDoc.LoadXml(xml);

        Console.WriteLine(xmlDoc.InnerText);
        Console.ReadLine();
    }

So what is the expectation?

- At the top of the code, we are setting some very simple XML.

- The XML contains an Entity that is attempting to pull data from the labs.developsec.com domain.

- The xxe_test.php file simply returns “test from the web..”

- Next, we create an XmlDocument to parse the xml variable.

- By default (after .net 4.5.1), the XmlDocument does not parse DTDs (or external entities).

- To allow this, we are going to set the XmlResolver. In this case, we are using the XmlSecureResolver assuming it will help limit the entities that are allowed to be parsed.

- I will have the XmlSecureResolver wrap a new XmlUrlResolver.

- I will also set the permission set to limit entities to the jardinesoftware.com domain.

- Finally, we load the xml and print out the result.

My assumption was that I should get an error because the URL passed into the XmlSecureResolver constructor does not match the URL that is referenced in the xml string’s entity definition.

https://www.jardinesoftware.com != https://labs.developsec.com

Can you guess what result I received?

If you guessed that it worked just fine and the entity was parsed, you guessed correctly. But why? The whole point of XmlSecureResolver is to be able to limit this to only entities from the allowed domain.

I went back and forth for a few hours trying different configurations. Accessing different files from different locations. It worked every time. What was going on?

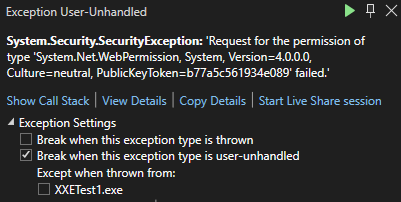

I then decided to switch from my Mac over to my Windows system. I loaded up Visual Studio 2022 and pulled up my old project from the old blog post. The difference? This project was targeting the .Net 4.8 framework. I ran the exact same code in the above snippet and sure enough, I received an error. I did not have permission to access that domain.

Finally, success. But I wasn’t satisfied. Why was it not working on my Mac? So I added the .Net 6 SDK to my Windows system and changed the target. Sure enough, no exception was thrown and everything worked fine. The entity was parsed.

Why is this important?

The risk around parsing XML like this is a vulnerability called XML External Entities (XXE). The short story here is that it allows a malicious user to supply an XML document that references external files and, if parsed, could allow the attacker to read the content of those files. There is more to this vulnerability, but that is the simple overview.

Since .net 4.5.2, the XmlDocument object was protected from this vulnerability by default because it sets the XmlResolver to null. This blocks the parser from parsing any external entities. The concern here is that a user may have decided they needed to allow entities from a specific domain and decided to use the XmlSecureResolver to do it. While that seemed to work in the 4.X versions of .Net, it seems to not work in .Net 6. This can be an issue if you thought you were good and then upgraded and didn’t realize that the functionality changed.

Conclusion

If you are using the XmlSecureResolver within your .Net application, make sure that it is working as you expect. Like many things with Microsoft .Net, everything can change depending on the version you are running. In my test cases, .Net 4.X seemed to work properly with this object. However, .Net 6 didn’t seem to respect the object at all, allowing DTD parsing when it was unexpected.

I did not opt to load every version of .Net to see when this changed. It is just another example where we have to be conscious of the security choices we make. It could very well be that this is a bug in the platform or that they are moving away from using this as a way to allow specific domains. In either event, I recommend checking to see if you are using this and verifying that it is working as expected.
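If you do need to allow entities from a specific domain and XmlSecureResolver is not enforcing it on your runtime, one possible alternative is a small custom resolver that checks the host itself. This is a sketch of a different technique, not something tested in the research above: `AllowListResolver` is a hypothetical class, and the HTTPS-only rule is an assumption. Verify it on the .Net version you actually target.

```csharp
using System;
using System.Collections.Generic;
using System.Xml;

// Hypothetical helper (not part of .Net): resolves external entities only from allowed hosts.
public sealed class AllowListResolver : XmlUrlResolver
{
    private readonly HashSet<string> _allowedHosts;

    public AllowListResolver(IEnumerable<string> allowedHosts)
    {
        _allowedHosts = new HashSet<string>(allowedHosts, StringComparer.OrdinalIgnoreCase);
    }

    // Assumption for this sketch: only absolute HTTPS URLs on an allowed host pass.
    public bool IsAllowed(Uri uri) =>
        uri.IsAbsoluteUri
        && uri.Scheme == Uri.UriSchemeHttps
        && _allowedHosts.Contains(uri.Host);

    // Called by the parser when it needs the content behind an external reference.
    public override object GetEntity(Uri absoluteUri, string role, Type ofObjectToReturn)
    {
        if (!IsAllowed(absoluteUri))
        {
            throw new XmlException("External entity blocked: " + absoluteUri);
        }
        return base.GetEntity(absoluteUri, role, ofObjectToReturn);
    }
}
```

It would be assigned the same way as in the snippet above, for example `xmlDoc.XmlResolver = new AllowListResolver(new[] { "www.jardinesoftware.com" });`.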

Input Validation for Security

Filed under: Development, Security

Validating input is an important step for reducing risk to our applications. It might not eliminate the risk, and for that reason we should consider what exactly we are doing with input validation.

Should you be looking for every attack possible?

Should you create a list of every known malicious payload?

When you think about input validation are you focusing on things like Cross-site Scripting, SQL Injection, or XXE, just to name a few? How feasible is it to attempt to block all these different vulnerabilities with input validation? Is each of these even a concern for your application?

I think that input validation is important. I think it can help reduce the exploitability of many of these vulnerabilities. I also think that our expectations should be aligned with what input validation can actually do. We shouldn’t overlook these vulnerabilities, but instead recognize the appropriate limitations. Each of these vulnerabilities has a counterpart control, such as escaping, output encoding, or parser configuration, that completes the appropriate mitigation.

If we can’t, or shouldn’t, block all vulnerabilities with input validation, what should we focus on?

Start with focusing on what is acceptable data. It might be counter-intuitive, but malicious data could be acceptable data from a data requirements statement. We define our acceptable data with specific constraints. These typically fall under the following categories:

* Range – What are the bounds of the data? ex. Age can only be between 0 and 150.

* Type – What type of data is it? Integer, Datetime, String.

* Length – How many characters should be allowed?

* Format – Is there a specific format? e.g. SSN or Account Number

As noted, these are not specifically targeting any vulnerability. They are narrowing the capabilities. If you verify that a value is an Integer, it is hard to get typical injection exploits. This is similar to custom format requirements. Limiting the length also restricts malicious payloads. A state abbreviation field with a length of 2 is much more difficult to exploit.
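To make the four constraint types concrete, here is a minimal sketch in C#. The `InputRules` class and the specific rules (a two-letter state code, a dashed SSN format) are assumptions chosen to mirror the examples above.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical validators for illustration only.
public static class InputRules
{
    // Type + range: must parse as an integer between 0 and 150.
    public static bool IsValidAge(string input) =>
        int.TryParse(input, out int age) && age >= 0 && age <= 150;

    // Length + format: exactly two ASCII letters, nothing else.
    public static bool IsValidStateCode(string input) =>
        input != null && Regex.IsMatch(input, @"\A[A-Za-z]{2}\z");

    // Format: digits and dashes in the shape 123-45-6789.
    public static bool IsValidSsnFormat(string input) =>
        input != null && Regex.IsMatch(input, @"\A[0-9]{3}-[0-9]{2}-[0-9]{4}\z");
}
```

None of these rules looks for an attack payload, yet a value like `1 OR 1=1` fails the age check simply because it is not an integer.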

The most difficult type is the string. Here you may have more complexity and depending on your purpose, might actually have more specific attacks you might look for. Maybe you allow some HTML or markup in the field. In that case, you may have more advanced input validation to remove malicious HTML or events.

There is nothing wrong with using libraries that will help look for malicious attack payloads during your input validation. However, the point here is to not spend so much time focusing on blocking EVERYTHING when that is not necessary to get the product moving forward. Understand the limitations of that input validation and ensure that the complementary controls, like output encoding, are properly engaged where they need to be.

The final point I want to make on input validation is where it should happen. There are two options: on the client, or on the server. Client validation is used for immediate feedback to the user, but it should never be used for security.

It is too easy to bypass client-side validation routines, so all validation should also be checked on the server. The user doesn’t have the ability to bypass controls once the data is on the server. Be careful with how you try to validate things on the client directly.

Like anything we do with security, understand the context and reasoning behind the control. Don’t get so caught up in trying to block every single attack that you never release. There is a good chance something will get through your input validation. That is why it is important to have other controls in place at the point of impact. Input validation limits the amount of bad traffic that can get to the important functions, but the functions still may need to do additional processes to be truly secure.

Chrome is making some changes… Are you Ready?

Filed under: Development, Security

Last year, Chrome announced that it was making a change to default cookies to SameSite=Lax if there is no SameSite setting explicitly set. I wrote about this change last year (https://www.jardinesoftware.net/2019/10/28/samesite-by-default-in-2020/). This change could have an impact on some sites, so it is important that you test this out. The changes are supposed to start rolling out in February (this month). The linked post shows how to force these defaults in both Firefox and Chrome.

In addition to this, Chrome has announced that it is going to start blocking mixed-content downloads (https://blog.chromium.org/2020/02/protecting-users-from-insecure.html). In this case, they are starting in Chrome 83 (June 2020) with blocking executable file downloads (.exe, .apk) that are over HTTP but requested from an HTTPS site.

The issue at hand is that users are misled into thinking the download is secure because the requesting page indicates it is over HTTPS. There isn’t a way for them to clearly see that the download request itself is insecure. The linked Chrome blog describes a timeline of how they will slowly block all mixed-content types.

For many sites this might not be a huge concern, but this is a good time to check your sites to determine if you have any type of mixed content and ways to mitigate this.

You can identify mixed content on your site by using the JavaScript Console. It can be found under the Developer Tools in your browser. The console will show a warning when it identifies mixed content. There may also be some scanners you can use that will crawl your site looking for mixed content.

To help mitigate this from a high level, you could implement CSP to upgrade insecure requests:

Content-Security-Policy: upgrade-insecure-requests

This can help by upgrading insecure requests, but it is not supported in all browsers. The following post goes into a lot of detail on mixed content and some ways to resolve it: https://developers.google.com/web/fundamentals/security/prevent-mixed-content/fixing-mixed-content

The increase in protections of the browsers can help reduce the overall threats, but always remember that it is the developer’s responsibility to implement the proper design and protections. Not all browsers are the same and you can’t rely on the browser to provide all the protections.

SameSite By Default in 2020?

Filed under: Development, Security, Testing

If you haven’t seen, Cross Site Request Forgery (CSRF) is getting a big protection by default in 2020. Currently, most protections need to be implemented explicitly. While we are seeing some nonces included and checked by default (Razor Pages), you typically still need to explicitly check the nonce. This requires that the developers understand that CSRF is a risk and how to prevent it. They then need to implement a mitigating solution.

Background

CSRF has been around for a long time. For those that don’t know, it is a vulnerability that allows an attacker to forge requests to your application that the user doesn’t initiate. Imagine being able to get a user to transfer money using a request like https://yourbank.com/transfer/300. The attacker places this request on another site in an image tag. When the image attempts to load, it sends the request to the bank site. Assuming the bank site uses cookies for authentication and session management, these cookies are sent with the request and the transfer is made (if the user was logged into their bank account).

The most common mitigation was to include a nonce with each request. This made the request to transfer money unique for every user. This is effective, but does require that the developer add the nonce and validate it on the request. A few years back, the browsers started adding support for the samesite attribute. This has the advantage of being set on the cookies instead of every request. The idea behind samesite is that a cookie will not be sent if the requesting domain is different than the destination domain. In our example above, if mybank.com has an image tag set to yourbank.com, the browser will not send the cookies for yourbank.com with the request.
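The nonce approach boils down to two steps: generate an unpredictable value for the user, then check that the same value comes back with the request. A minimal sketch, assuming .Net 6 or later; the `CsrfToken` class is hypothetical, and most frameworks ship their own anti-forgery helpers that you would normally use instead.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper for illustration only.
public static class CsrfToken
{
    // Generate an unpredictable token to store server-side and embed in the form.
    public static string Create() =>
        Convert.ToBase64String(RandomNumberGenerator.GetBytes(32));

    // Compare the token submitted with the request to the one stored for the user.
    public static bool IsValid(string expected, string submitted)
    {
        if (string.IsNullOrEmpty(expected) || string.IsNullOrEmpty(submitted))
        {
            return false;
        }
        // Constant-time comparison; returns false when the lengths differ.
        return CryptographicOperations.FixedTimeEquals(
            Encoding.UTF8.GetBytes(expected),
            Encoding.UTF8.GetBytes(submitted));
    }
}
```

A forged request from another site cannot include the right token, so `IsValid` fails even though the cookies were sent.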

Today

Fortunately, we are at a time when most browsers have support for samesite, but it does require that the developer set the appropriate setting. Like implementing a nonce, this is put on the developer to take an explicit action. The adoption of samesite is gaining. Frameworks like .Net Core set this for identity cookies to lax by default.

2020

Chrome has announced that in 2020, Chrome 80 will set the samesite flag to lax for all cookies by default. (https://blog.chromium.org/2019/10/developers-get-ready-for-new.html) This is good news, as it will help take a huge dent out of cross-site request forgery. Of course, that only applies if you are using Chrome as a browser. I am sure that Mozilla and Microsoft will follow suit, but there is no mention of a timeline for when that will happen. So is CSRF dead? No. It has taken a strong blow though.

But wait, it is just set to lax.. what does that mean? There are two restrictive settings for samesite: strict and lax. Lax, as its name implies, is a little more forgiving. For the most part, it is good enough coverage if you follow your basic guidelines (don’t use GET for making changes to your system). However, if you do use GET requests, you still have a risk. Remember that example earlier, https://yourbank.com/transfer/300? This is using a GET request. With lax, the image tag no longer sends the cookies, but an attacker can put that URL in an anchor tag on their site instead. Now, if the user clicks the link, it will open as the top-level request and will still send the cookies. This is the difference between strict and lax. Strict would not allow the cookies to be sent in this scenario.
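The difference can be written down as a small decision function. This is a simplified model of the browser behavior described above, not browser code; the `SameSite` enum and `SameSiteModel` class are hypothetical names, and real browsers apply additional rules.

```csharp
using System;

public enum SameSite { None, Lax, Strict }

// Simplified, hypothetical model of when a browser attaches a cookie.
public static class SameSiteModel
{
    public static bool CookieIsSent(SameSite mode, bool crossSite, bool topLevelNavigation, string method)
    {
        // Requests that stay on the same site always carry the cookie.
        if (!crossSite)
        {
            return true;
        }

        switch (mode)
        {
            case SameSite.None:
                return true;
            case SameSite.Lax:
                // Only top-level navigations using GET (a clicked link) get the cookie.
                return topLevelNavigation
                    && string.Equals(method, "GET", StringComparison.OrdinalIgnoreCase);
            default:
                // Strict: never sent on cross-site requests.
                return false;
        }
    }
}
```

The image tag case is `CookieIsSent(SameSite.Lax, true, false, "GET")`, which is false, while the clicked link is `CookieIsSent(SameSite.Lax, true, true, "GET")`, which is true.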

What does this mean?

At this point, this change means you should be checking your current applications to see if you have any type of cross-site requests that need to send cookies to work. If these exist, you will need to take action to turn samesite off or make other accommodations. If you find that samesite will be a problem for your setup, you can turn it off by setting samesite: none. This does require that the cookie is set to secure.
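In ASP.Net Core, setting the attribute explicitly might look like the sketch below. It assumes the `Microsoft.AspNetCore.Http` types are available in your project; the `CookiePolicies` class and its method names are hypothetical.

```csharp
using Microsoft.AspNetCore.Http;

// Hypothetical helper for illustration only.
public static class CookiePolicies
{
    // For cookies that never need to travel on cross-site requests.
    public static CookieOptions FirstPartyOnly() => new CookieOptions
    {
        SameSite = SameSiteMode.Lax,
        Secure = true,
        HttpOnly = true
    };

    // For cookies that must be sent cross-site: none requires the secure flag.
    public static CookieOptions CrossSite() => new CookieOptions
    {
        SameSite = SameSiteMode.None,
        Secure = true,
        HttpOnly = true
    };
}
```

A controller could then call something like `Response.Cookies.Append("name", value, CookiePolicies.CrossSite());` for the few cookies that truly need it.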

If your application doesn’t use cross-site requests, you still should take action. Remember, this only defaults in Chrome. So if your users are using anything else, this change doesn’t affect them yet. They will still be vulnerable if you are not implementing other CSRF mitigations.

Making the Change in Firefox Now

Firefox does have the ability to enable this behavior in about:config. Starting in Firefox 69, you can modify the following preferences:

- network.cookie.sameSite.laxByDefault

- network.cookie.sameSite.noneRequiresSecure

These are both set to false by default, but a user can change them to true. Note that this is a user setting and not one that you can force your users to set. It is still recommended to set the samesite attribute through your application.

Making the Change in Chrome Now

Chrome has the ability to enable this behavior in chrome://flags. There are two settings:

- SameSite by default cookies

- Cookies without SameSite must be secure

These are currently both set to false by default, but you can change them to true.

Be Careful

As a user, making these changes can add a layer of protection, but it can also break some sites you may use. Be careful when enabling these since it may render some sites unreliable.

The end of Request Validation

Filed under: Development, Security

One of the often overlooked features of ASP.Net applications was request validation. If you are a .Net web developer, you have probably seen this before. I have certainly covered it on multiple occasions on this site. The main goal: help reduce XSS type input from being supplied by the user. .Net Core has opted to not bring this feature along and has dropped it with no hope in sight.

Request Validation Limitations

Request validation was a nice to have.. a small extra layer of protection. It wasn’t foolproof and certainly had more limitations than originally expected. The biggest one was that it only supported the HTML context for cross-site scripting. Basically, it was trying to deter the submission of HTML tags or elements from end users. Cross-site scripting is context sensitive, meaning that attribute, URL, JavaScript, and CSS based contexts were not considered.

In addition, there have been identified bypasses for request validation over the years. For example, using unicode-wide characters and then storing the data in ASCII format could allow bypassing the validation.

Input validation is only a part of the solution for cross-site scripting. The ultimate end-state is the use of output encoding of the data sent back to the browser. Why? Not all data is guaranteed to go through our expected inputs. Remember, we don’t trust the database.
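As a small illustration of output encoding for the HTML context, the sketch below encodes a value at the point where it is written into markup. The `HtmlOutput` class is hypothetical. Razor already encodes by default, so this matters most when you build markup by hand, and other contexts (attributes, URLs, JavaScript) need their own encoders.

```csharp
using System.Net;

// Hypothetical helper for illustration only; covers the HTML element context.
public static class HtmlOutput
{
    // Encode at output time, regardless of where the value came from.
    public static string Greeting(string userSuppliedName) =>
        "<p>Hello, " + WebUtility.HtmlEncode(userSuppliedName) + "</p>";
}
```

Because the encoding happens on the way out, it still applies to data that reached the database through some path other than your validated inputs.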

False Sense of Security?

Some have argued that the feature did more harm than good. It created a false sense of security compared to what it could do. While I don’t completely agree with that, I have seen those examples. I have seen developers assume they were protected just because request validation was enabled. Unfortunately, this is a bad assumption based on a misunderstanding of the feature. The truth is that it is not a feature meant to stop all cross-site scripting. The goal was to create a way to provide some default input validation for a specific vulnerability. Maybe it was misunderstood. Maybe it was a great idea with impossible implementation. In either case, it was a small piece of the puzzle.

So What Now?

So moving forward with Core, request validation is out of the picture. There is nothing we can do about that from a framework perspective. Maybe we don’t have to. There may be people that create this same functionality in 3rd party packages. That may work, it may not. Now is our opportunity to make sure we understand the flaws and proper protection mechanisms. When it comes to Cross-site scripting, there are a lot of techniques we can use to reduce the risk. Obviously I rely on output encoding as the biggest and first step. There are also things like content security policy or other response headers that can help add layers of protection. I talk about a few of these options in my new course “Security Fundamentals for Application Teams”.

Remember that understanding your framework is critical in helping reduce security risks in your application. If you are making the switch to .Net Core, keep in mind that not all the features you may be used to exist. Understand these changes so you don’t get bit.

Security Tips for Copy/Paste of Code From the Internet

Filed under: Development, Security

Developing applications has long involved using code snippets found through textbooks or on the Internet. Rather than re-invent the wheel, it makes sense to identify existing code that helps solve a problem. It may also help speed up the development time.

Years ago, maybe 12, I remember a co-worker that had a SQL Injection vulnerability in his application. The culprit: code copied from someone else. At the time, I explained that once you copy code into your application it is now your responsibility.

Here, 12 years later, I still see this type of occurrence. Using code snippets directly from the web in the application. In many of these cases there may be some form of security weakness. How often do we, as developers, really analyze and understand all the details of the code that we copy?

Here are a few tips when working with external code brought into your application.

Understand what it does

If you were looking for code snippets, you should have a good idea of what the code will do. Better yet, you probably have an understanding of what you think that code will do. How rigorously do you inspect it to make sure that is all it does? Maybe the code performs the specific task you set out to complete, but what happens if there are other functions you weren’t even looking for? This may not be as much of a concern with very small snippets. However, larger sections of code could cover up other functionality. This doesn’t mean that the functionality is intentionally malicious, but undocumented, unintended functionality may open up risk to the application.

Change any passwords or secrets

Depending on the code that you are searching for, there may be secrets within it. For example, encryption routines are commonly grabbed off the Internet. To be complete, they often contain hard-coded IVs and keys. These should be changed to something unique when imported into your projects. This could also be the case for code that has passwords or other hard-coded values that may provide access to the system.
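Replacing a copied key and IV does not have to be complicated. The sketch below generates fresh random values with the built-in AES class; the `KeyMaterial` name and the 256-bit key size are assumptions. Where you store the key (a secrets vault, protected configuration) is a separate decision this example does not cover, and an IV should normally be generated fresh for each message.

```csharp
using System.Security.Cryptography;

// Hypothetical helper for illustration only.
public static class KeyMaterial
{
    // Generates a random 256-bit key and a random IV instead of reusing copied values.
    public static (byte[] Key, byte[] IV) NewAesKeyAndIv()
    {
        using Aes aes = Aes.Create();
        aes.KeySize = 256;
        aes.GenerateKey();
        aes.GenerateIV();
        return (aes.Key, aes.IV);
    }
}
```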

As I was writing this, I noticed a post about the RadAsyncUpload control regarding the defaults within it. While this is not code copy/pasted from the Internet, it highlights the need to understand the default configurations and that some values should be changed to help provide better protections.

Look for potential vulnerabilities

In addition to the above concerns, the code may have vulnerabilities in it. Imagine a snippet of code used to select data from a SQL database. What if that code passed your tests by accurately returning the query results, but uses inline SQL and is vulnerable to SQL Injection? The same could happen for code that is vulnerable to Cross-Site Scripting or that does not check proper authorization.

We have to do a better job of performing code reviews on these external snippets, just as we should be doing it on our custom written internal code. Finding snippets of code that perform our needed functionality can be a huge benefit, but we can’t just assume it is production ready. If you are using this type of code, take the time to understand it and review it for potential issues. Don’t stop at just verifying the functionality. Take steps to vet the code just as you would any other code within your application.

Securing The .Net Cookies

Filed under: Development, Security

I remember years ago when we talked about cookie poisoning, the act of modifying cookies to get the application to act differently. An example was the classic cookie used to indicate a user’s role in the system. Often times it would contain 1 for Admin or 2 for Manager, etc. Change the cookie value and all of a sudden you were the new admin on the block. You really don’t hear the phrase cookie poisoning anymore, I guess it was too dark.

There are still security risks around the cookies that we use in our application. I want to highlight 2 key attributes that help protect the cookies for your .Net application: Secure and httpOnly.

Secure Flag

The secure flag tells the browser that the cookie should only be sent to the server if the connection is using the HTTPS protocol. Ultimately this is indicating that the cookie must be sent over an encrypted channel, rather than over HTTP which is plain text.

HttpOnly Flag

The httpOnly flag tells the browser that the cookie should only be accessed to be sent to the server with a request, not by client-side scripts like JavaScript. This attribute helps protect the cookie from being stolen through cross-site scripting flaws.

Setting The Attributes

There are multiple ways to set these attributes of a cookie. Things get a little confusing when talking about session cookies or the forms authentication cookie, but I will cover that as I go. The easiest way to set these flags for all developer created cookies is through the web.config file. The following snippet shows the httpCookies element in the web.config.

    <system.web>
      <authentication mode="None" />
      <compilation targetFramework="4.6" debug="true" />
      <httpRuntime targetFramework="4.6" />
      <httpCookies httpOnlyCookies="true" requireSSL="true" />
    </system.web>

As you can see, you can set httpOnlyCookies to true to set the httpOnly flag on all of the cookies. In addition, the requireSSL setting sets the secure flag on all of the cookies, with a few exceptions.

Some Exceptions

I stated earlier there are a few exceptions to the cookie configuration. The first I will discuss is the session cookie. The session cookie in ASP.Net is defaulted/hard-coded to set the httpOnly attribute. This should override any value set in the httpCookies element in the web.config. The session cookie does not default to requireSSL, and setting that value in the httpCookies element as shown above should work just fine for it.

The forms authentication cookie is another exception to the rules. Like the session cookie, it is hard-coded to httpOnly. The Forms element of the web.config has a requireSSL attribute that will override what is found in the httpCookies element. Simply put, if you don’t set requireSSL=’true’ in the Forms element then the cookie will not have the secure flag even if requireSSL=’true’ in the httpCookies element.

This is actually a good thing, even though it might not seem so yet. Here is the next thing about that Forms requireSSL setting.. When you set it, it will require that the web server is using a secure connection. Seems like common sense, but imagine a web farm where the load balancers offload SSL. In this case, while your web app uses HTTPS from client to server, in reality, the HTTPS stops at the load balancer and is then HTTP to the web server. This will throw an exception in your application.

I am not sure why Microsoft decided to actually check this value, since the secure flag is a direction for the browser, not the server. If you are in this situation you can still set the secure flag, you just need to do it a little differently. One option is to use your load balancer to set the flag when it sends any responses. Not all devices may support this, so check with your vendor. The other option is to programmatically set the flag right before the response is sent to the user. The basic process is to find the cookie and just set the .Secure property to ‘True’.
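A rough sketch of the programmatic option for classic ASP.Net (System.Web) is shown below. The `CookieHardening` class is hypothetical, and hooking it into `Application_EndRequest` is an assumption about where it fits; confirm in your own application that the response headers have not already been sent at that point.

```csharp
using System;
using System.Web;

// Hypothetical helper for illustration only (classic ASP.Net / System.Web).
public static class CookieHardening
{
    // Sets the secure flag on every cookie in the collection.
    public static void MarkAllSecure(HttpCookieCollection cookies)
    {
        // AllKeys returns a copy, so changing cookies while looping is safe.
        foreach (string name in cookies.AllKeys)
        {
            cookies[name].Secure = true;
        }
    }
}

// Possible usage in Global.asax.cs (assumed placement):
// protected void Application_EndRequest(object sender, EventArgs e)
// {
//     CookieHardening.MarkAllSecure(Response.Cookies);
// }
```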

Final Thoughts

While there are other security concerns around cookies, I see the secure and httpOnly flag commonly misconfigured. While it does not seem like much, these flags go a long way to helping protect your application. ASP.Net has done some tricky configuration of how this works depending on the cookie, so hopefully this helps sort some of it out. If you have questions, please don’t hesitate to contact me. I will be putting together something a little more formal to hopefully clear this up a bit more in the near future.

Are Application Security Certifications Worth It?

Filed under: Security

In the IT industry there has always been a debate for and against certifications. This is no different than the age-old battle of whether or not a bachelor’s degree is needed to be good in IT. There are large entities that have made a really good profit off the certification tracks. Not only do you have the people that create the tests, but also all of the testing centers. It is a pretty lucrative business if your cert is popular.

I remember when I first started developing applications there were certifications like the Microsoft Certified series or Sun certifications. Anyone remember doing the BrainBench tests online? The goal was to indicate that you had some base level of knowledge about that technology. This seemed to work for a technology, but so far it doesn’t seem to be catching on in the development world for secure development certifications.

You haven’t heard? There are actually certifications that try to show some expertise in application security. GIAC has a secure coding program for both Java and .Net, both leading to the GSSP certification. ISC2 has the CSSLP certification focused on those that work with developing applications. They don’t feel that widespread though. Let’s look at these two examples.

The GIAC certification focuses mostly on the developer and writing secure code. This is tough because it is a certification for a portion of your job as a developer. Your main goal is writing code so to take the effort to go out and get a certification that is so focused can be deterring, never mind the cost of these certs these days. The other issue is that we are not seeing a wide acceptance in the industry for these certifications. I have not seen many job postings for developers that look for the GSSP, or CSSLP certification or any other secure coding cert. You might see MCP or MCSD, but not security certs. Until we start looking for these in our candidates, there is no reason for developers to take the time to get them.

The ISC2 CSSLP certification is geared less at secure coding, and focused more toward the entire SDLC. This alone may make it even less interesting to a developer to attain because it is not directly related to coding. Sure we are involved in the SDLC, but do we really want some cert that says we are security conscious? I am not saying that certifications are a bad thing. I think they can help show some competence, but there seem to be a lot of barriers to adoption within the developer community with security certifications.

When you look at other security certifications they are more job direct, or encompassing. For example, the Web Application Penetration Tester certifications that are available encompass a role: Web Penetration Tester. In our examples above, there is no GSSP role for a developer.

How do we go about solving the problem? Is there a certification that could actually be broadly adopted in the developer world? Rather than have a separate security certification, should we expect that the other developer certifications would incorporate security? Just because I have the GSSP doesn’t mean I can actually write good programs with no flaws. Would I be more marketable if I had the MCSD and everyone knew that that required secure coding expertise?

Push the major developer certification creators to start requiring more secure coding coverage. We shouldn’t need an extra certification for application security, it should just be a part of what we do every day.

Future of ViewStateMac: What We Know

Filed under: Development, Security, Testing

The .Net Web Development and Tools Blog just recently posted some extra information about ASP.Net December 2013 Security Updates (http://blogs.msdn.com/b/webdev/archive/2013/12/10/asp-net-december-2013-security-updates.aspx).

The most interesting thing to me was a note near the bottom of the page that states that the next version of ASP.Net will FORBID setting ViewStateMac=false. That is right.. They will not allow it in the next version. So in short, if you have set it to false, start working out how to set it to true before you update.

So why forbid it? Apparently, there was a Remote Code Execution flaw identified that can be exploited when ViewStateMac is disabled. They don’t include a lot of details as to how to perform said exploit, but that is neither here nor there. It is about time that something was critical enough that they have decided to take this property out of the developer’s hands.

Over the years I have written many posts discussing attacking ASP.Net sites, many of which rely on ViewStateMac being disabled. I have written to Microsoft regarding how EventValidation can be manipulated if ViewStateMac is disabled. The response was that they think developers should be using the secure settings. I guess that is different now that there is remote code execution. We have reached a new level.

So what does ViewStateMac protect? There are three things that I am aware of that it protects (search this site for any of these and you will find articles with much more detail):

- ViewState – protects this from parameter tampering

- EventValidation – protects this from parameter tampering

- ViewStateUserKey – Used to protect requests from CSRF

So why do developers disable ViewStateMac? Great question. I believe that in most cases, it is disabled because the application is deployed in a web farm and when the web.config is not configured properly, an error is thrown. When some developers search for the error, many forums recommend disabling the ViewStateMac to fix the problem. Unfortunately, that is WRONG!! Here is a Microsoft KB article that explains in detail how to properly configure a system to allow ViewStateMac to be enabled (http://support.microsoft.com/kb/2915218).

Is this a good thing? For developers, yes! This will definitely help increase the protection for ViewState, EventValidation and CSRF if ViewStateUserKey is set. For Penetration Testers, yes and no. Yes, because we get to say you are doing a good job in this category. No, because some easy pickings are going to be wiped off the plate.

I think this is a pretty bold move by Microsoft to remove control over this, but I do think it is a good thing. This is an important control in the WebForm ecosystem and all too often misunderstood by developers. This should bring many sites one step closer to being a little more secure when this change rolls out.